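When we monitor a Kubernetes cluster with Prometheus, kube-state-metrics (KSM) is practically a required component. It watches the APIServer and generates metrics about the state of resource objects. It does not look at the health of individual Kubernetes components. Instead, it reports the state of resource objects such as Deployments, Nodes, Pods, Ingresses, Jobs and Services. Each resource type exposes a number of metrics, documented at https://github.com/kubernetes/kube-state-metrics/tree/main/docs.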

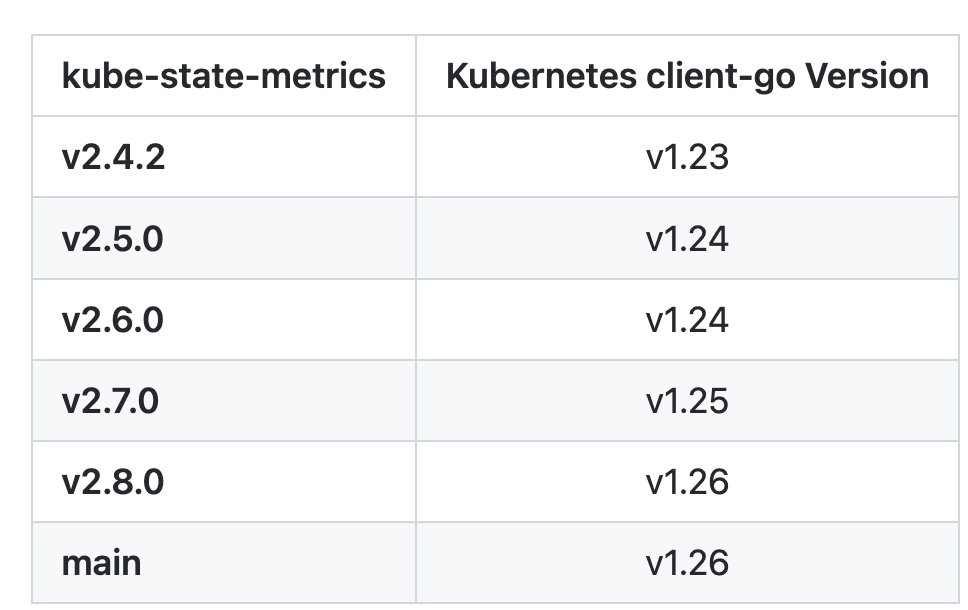

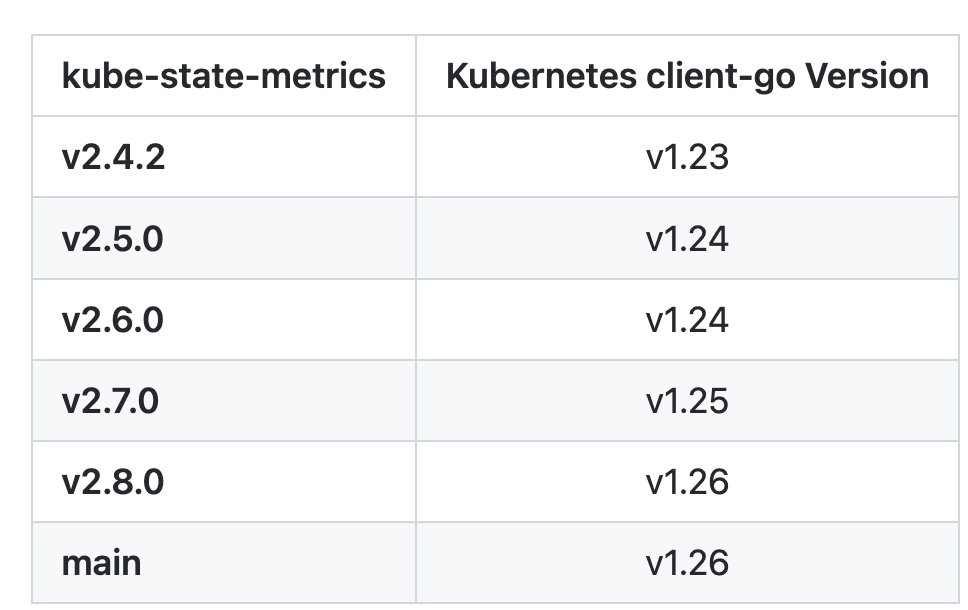
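To make "state metrics" concrete, here is a minimal Go sketch that turns one field of a resource object's status into a sample in the Prometheus text exposition format. The struct and function names are hypothetical. The metric name and labels mirror KSM's `kube_deployment_status_replicas_available`, but this is an illustration, not KSM code; KSM builds its samples from the objects it receives via list/watch.

```go
package main

import (
	"fmt"
	"sort"
	"strconv"
	"strings"
)

// deploymentStatus is a hypothetical, trimmed-down view of a Deployment.
type deploymentStatus struct {
	Namespace         string
	Name              string
	AvailableReplicas int32
}

// labelEscaper escapes label values as the text exposition format requires.
var labelEscaper = strings.NewReplacer(`\`, `\\`, `"`, `\"`, "\n", `\n`)

// renderSample formats one sample line; label names are sorted for stable output.
func renderSample(metric string, labels map[string]string, value float64) string {
	names := make([]string, 0, len(labels))
	for name := range labels {
		names = append(names, name)
	}
	sort.Strings(names)
	pairs := make([]string, 0, len(names))
	for _, name := range names {
		pairs = append(pairs, name+`="`+labelEscaper.Replace(labels[name])+`"`)
	}
	return metric + "{" + strings.Join(pairs, ",") + "} " + strconv.FormatFloat(value, 'g', -1, 64)
}

func main() {
	d := deploymentStatus{Namespace: "default", Name: "web", AvailableReplicas: 3}
	// Prints: kube_deployment_status_replicas_available{deployment="web",namespace="default"} 3
	fmt.Println(renderSample("kube_deployment_status_replicas_available",
		map[string]string{"namespace": d.Namespace, "deployment": d.Name},
		float64(d.AvailableReplicas)))
}
```

Every object KSM watches yields lines like this, one per metric and label combination. That is why the payload grows with the number of objects in the cluster.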

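Installing KSM is also straightforward, because the repository ships the manifests you need. When installing, make sure the KSM version is compatible with your Kubernetes cluster version.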

My test cluster runs v1.25, so I first check out the matching release tag:

```shell
$ git clone https://github.com/kubernetes/kube-state-metrics && cd kube-state-metrics
$ git checkout v2.7.0
$ kubectl apply -f examples/standard
```

This deploys a single KSM instance as a Deployment:

```shell
$ kubectl get deploy -n kube-system kube-state-metrics
NAME                 READY   UP-TO-DATE   AVAILABLE   AGE
kube-state-metrics   1/1     1            1           2m49s
$ kubectl get pods -n kube-system -l app.kubernetes.io/name=kube-state-metrics
NAME                                  READY   STATUS    RESTARTS   AGE
kube-state-metrics-548546fc89-zgkx5   1/1     Running   0          2m51s
```

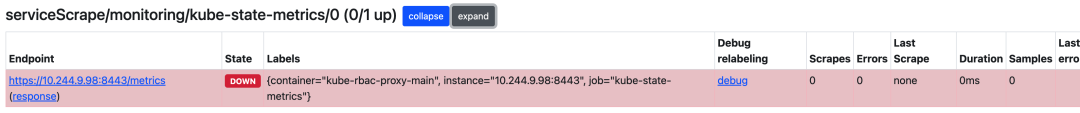

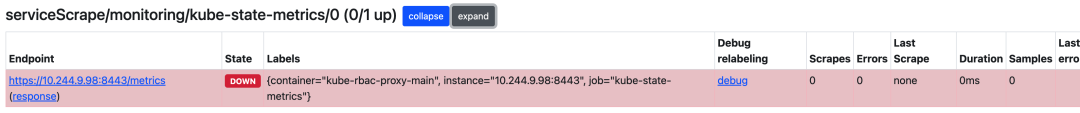

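All that is left is to let Prometheus discover the KSM instance, and there are several ways to do it. You can discover it automatically through annotations, or create a dedicated scrape job for KSM. If you use the Prometheus Operator, you can create a ServiceMonitor object to collect the KSM metrics.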

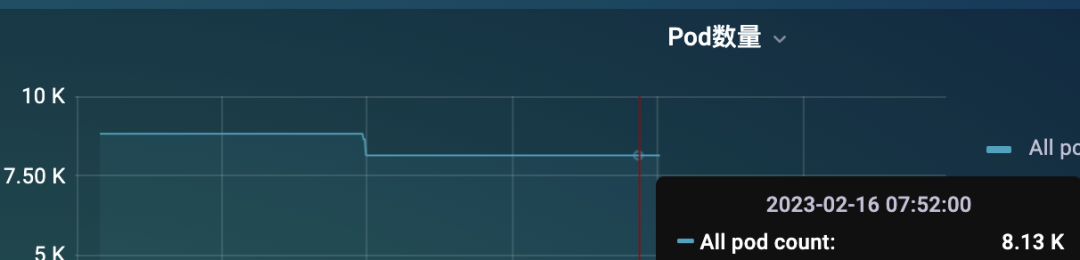

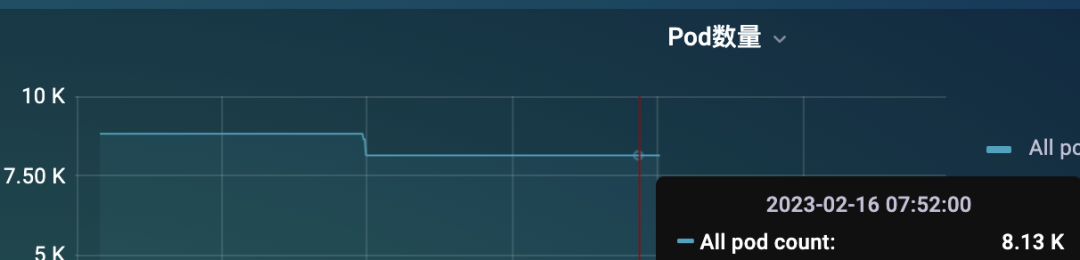

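This works fine for small clusters. The data volume is modest and the service keeps up, so once KSM is made highly available it is ready for production. Large clusters are much harder. Take one of our clusters with roughly 8K Pods, which is not even especially large.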

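Even so, serving all of its metrics from a single KSM instance is a real struggle. Most of the time the scrape simply fails, because the metrics endpoint returns far too much data.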

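Even when a scrape occasionally succeeds, it takes a long time. Keep in mind that Prometheus requests this endpoint every scrape_interval. A response that runs past scrape_timeout (which cannot exceed scrape_interval) makes the scrape fail, and the next scrape is already due. The fix has to start on the KSM side, whose startup flags let us filter out metrics we do not need: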

```shell
$ kube-state-metrics -h
kube-state-metrics is a simple service that listens to the Kubernetes API server and generates metrics about the state of the objects.

Usage:
  kube-state-metrics [flags]
  kube-state-metrics [command]

Available Commands:
  completion   Generate completion script for kube-state-metrics.
  help         Help about any command
  version      Print version information.

Flags:
      --add_dir_header   If true, adds the file directory to the header of the log messages
      --alsologtostderr   log to standard error as well as files (no effect when -logtostderr=true)
      --apiserver string   The URL of the apiserver to use as a master
      --config string   Path to the kube-state-metrics options config file
      --custom-resource-state-config string   Inline Custom Resource State Metrics config YAML (experimental)
      --custom-resource-state-config-file string   Path to a Custom Resource State Metrics config file (experimental)
      --custom-resource-state-only   Only provide Custom Resource State metrics (experimental)
      --enable-gzip-encoding   Gzip responses when requested by clients via 'Accept-Encoding: gzip' header.
  -h, --help   Print Help text
      --host string   Host to expose metrics on. (default "::")
      --kubeconfig string   Absolute path to the kubeconfig file
      --log_backtrace_at traceLocation   when logging hits line file:N, emit a stack trace (default :0)
      --log_dir string   If non-empty, write log files in this directory (no effect when -logtostderr=true)
      --log_file string   If non-empty, use this log file (no effect when -logtostderr=true)
      --log_file_max_size uint   Defines the maximum size a log file can grow to (no effect when -logtostderr=true). Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)
      --logtostderr   log to standard error instead of files (default true)
      --metric-allowlist string   Comma-separated list of metrics to be exposed. This list comprises of exact metric names and/or regex patterns. The allowlist and denylist are mutually exclusive.
      --metric-annotations-allowlist string   Comma-separated list of Kubernetes annotations keys that will be used in the resource' labels metric. By default the metric contains only name and namespace labels. To include additional annotations provide a list of resource names in their plural form and Kubernetes annotation keys you would like to allow for them (Example: '=namespaces=[kubernetes.io/team,...],pods=[kubernetes.io/team],...)'. A single '*' can be provided per resource instead to allow any annotations, but that has severe performance implications (Example: '=pods=[*]').
      --metric-denylist string   Comma-separated list of metrics not to be enabled. This list comprises of exact metric names and/or regex patterns. The allowlist and denylist are mutually exclusive.
      --metric-labels-allowlist string   Comma-separated list of additional Kubernetes label keys that will be used in the resource' labels metric. By default the metric contains only name and namespace labels. To include additional labels provide a list of resource names in their plural form and Kubernetes label keys you would like to allow for them (Example: '=namespaces=[k8s-label-1,k8s-label-n,...],pods=[app],...)'. A single '*' can be provided per resource instead to allow any labels, but that has severe performance implications (Example: '=pods=[*]'). Additionally, an asterisk (*) can be provided as a key, which will resolve to all resources, i.e., assuming '--resources=deployments,pods', '=*=[*]' will resolve to '=deployments=[*],pods=[*]'.
      --metric-opt-in-list string   Comma-separated list of metrics which are opt-in and not enabled by default. This is in addition to the metric allow- and denylists
      --namespaces string   Comma-separated list of namespaces to be enabled. Defaults to ""
      --namespaces-denylist string   Comma-separated list of namespaces not to be enabled. If namespaces and namespaces-denylist are both set, only namespaces that are excluded in namespaces-denylist will be used.
      --node string   Name of the node that contains the kube-state-metrics pod. Most likely it should be passed via the downward API. This is used for daemonset sharding. Only available for resources (pod metrics) that support spec.nodeName fieldSelector. This is experimental.
      --one_output   If true, only write logs to their native severity level (vs also writing to each lower severity level; no effect when -logtostderr=true)
      --pod string   Name of the pod that contains the kube-state-metrics container. When set, it is expected that --pod and --pod-namespace are both set. Most likely this should be passed via the downward API. This is used for auto-detecting sharding. If set, this has preference over statically configured sharding. This is experimental, it may be removed without notice.
      --pod-namespace string   Name of the namespace of the pod specified by --pod. When set, it is expected that --pod and --pod-namespace are both set. Most likely this should be passed via the downward API. This is used for auto-detecting sharding. If set, this has preference over statically configured sharding. This is experimental, it may be removed without notice.
      --port int   Port to expose metrics on. (default 8080)
      --resources string   Comma-separated list of Resources to be enabled. Defaults to "certificatesigningrequests,configmaps,cronjobs,daemonsets,deployments,endpoints,horizontalpodautoscalers,ingresses,jobs,leases,limitranges,mutatingwebhookconfigurations,namespaces,networkpolicies,nodes,persistentvolumeclaims,persistentvolumes,poddisruptionbudgets,pods,replicasets,replicationcontrollers,resourcequotas,secrets,services,statefulsets,storageclasses,validatingwebhookconfigurations,volumeattachments"
      --shard int32   The instances shard nominal (zero indexed) within the total number of shards. (default 0)
      --skip_headers   If true, avoid header prefixes in the log messages
      --skip_log_headers   If true, avoid headers when opening log files (no effect when -logtostderr=true)
      --stderrthreshold severity   logs at or above this threshold go to stderr when writing to files and stderr (no effect when -logtostderr=true or -alsologtostderr=false) (default 2)
      --telemetry-host string   Host to expose kube-state-metrics self metrics on. (default "::")
      --telemetry-port int   Port to expose kube-state-metrics self metrics on. (default 8081)
      --tls-config string   Path to the TLS configuration file
      --total-shards int   The total number of shards. Sharding is disabled when total shards is set to 1. (default 1)
      --use-apiserver-cache   Sets resourceVersion=0 for ListWatch requests, using cached resources from the apiserver instead of an etcd quorum read.
  -v, --v Level   number for the log level verbosity
      --vmodule moduleSpec   comma-separated list of pattern=N settings for file-filtered logging

Use "kube-state-metrics [command] --help" for more information about a command.
```
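Per the help text, `--metric-allowlist` and `--metric-denylist` each take a comma-separated list of exact metric names and/or regex patterns, and the two cannot be combined. The Go sketch below models those semantics. It is not KSM's implementation, and the type and function names are hypothetical. Matching each pattern against the *whole* metric name is an assumption of this sketch, so check KSM's behaviour before relying on partial matches.

```go
package main

import (
	"errors"
	"fmt"
	"regexp"
	"strings"
)

// metricFilter is a hypothetical model of KSM's allow/deny lists.
type metricFilter struct {
	patterns []*regexp.Regexp
	allow    bool // true: only matching metrics are exposed; false: matching ones are dropped
}

// newMetricFilter parses the comma-separated lists; at most one may be set.
func newMetricFilter(allowlist, denylist string) (*metricFilter, error) {
	if allowlist != "" && denylist != "" {
		return nil, errors.New("allowlist and denylist are mutually exclusive")
	}
	raw, allow := denylist, false
	if allowlist != "" {
		raw, allow = allowlist, true
	}
	f := &metricFilter{allow: allow}
	for _, p := range strings.Split(raw, ",") {
		if p = strings.TrimSpace(p); p == "" {
			continue
		}
		// Assumption: each entry must match the full metric name.
		re, err := regexp.Compile("^(?:" + p + ")$")
		if err != nil {
			return nil, fmt.Errorf("bad pattern %q: %w", p, err)
		}
		f.patterns = append(f.patterns, re)
	}
	return f, nil
}

// exposed reports whether a metric survives the filter.
func (f *metricFilter) exposed(name string) bool {
	matched := false
	for _, re := range f.patterns {
		if re.MatchString(name) {
			matched = true
			break
		}
	}
	if f.allow {
		return matched
	}
	return !matched
}

func main() {
	f, err := newMetricFilter("", "kube_pod_container_.*,kube_replicaset_.*")
	if err != nil {
		panic(err)
	}
	for _, m := range []string{"kube_pod_info", "kube_pod_container_info", "kube_replicaset_owner"} {
		fmt.Println(m, f.exposed(m))
	}
}
```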

You can filter with the --metric-allowlist or --metric-denylist flag. But what if the metrics endpoint is still huge even after the unneeded metrics and labels have been filtered out?

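Think about it: no matter how much you filter, a single request to the metrics endpoint still returns a huge payload. At that point the only option left is to split the metrics. If we deploy several KSM instances and have each serve part of the data, the pressure on each one drops. This is what is usually called horizontal sharding. kube-state-metrics already has built-in sharding support, configured through the following flags: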

- --shard (zero-based)

- --total-shards

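Sharding works by taking the MD5 hash of each Kubernetes object's UID modulo the total number of shards. Each shard uses the result to decide whether its kube-state-metrics instance handles the object. Note, however, that every kube-state-metrics instance, even when sharded, still bears the network traffic and resource cost of processing *all* objects, not just the ones it is responsible for. Fixing that would require the Kubernetes API to support sharded list/watch. In the optimal case, each shard's memory consumption is 1/n of an unsharded setup. In general, kube-state-metrics needs memory and latency optimizations to return its metrics to Prometheus quickly. One way to reduce the latency between kube-state-metrics and kube-apiserver is to run KSM with the --use-apiserver-cache flag. This sets resourceVersion=0 on ListWatch requests, so they are served from the apiserver cache instead of an etcd quorum read. Besides lowering latency, this option also reduces the load on etcd, so we recommend enabling it.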
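The shard decision described above can be sketched in a few lines of Go. This is a minimal illustration, not the KSM source. The helper name `ownsObject` and the sample UIDs are hypothetical. Turning the first 8 bytes of the MD5 digest into a big-endian `uint64` is an assumption about how the hash becomes an integer.

```go
package main

import (
	"crypto/md5"
	"encoding/binary"
	"fmt"
)

// ownsObject reports whether the instance with zero-based index shard (out of
// totalShards) handles the object with this UID: MD5(UID) modulo totalShards.
// Assumption: the first 8 digest bytes are read as a big-endian uint64; KSM's
// own code may derive the integer from the digest differently.
func ownsObject(uid string, shard, totalShards uint64) bool {
	sum := md5.Sum([]byte(uid))
	return binary.BigEndian.Uint64(sum[:8])%totalShards == shard
}

func main() {
	// Hypothetical object UIDs.
	uids := []string{
		"3f6d2c1a-7b1e-4c55-9a0e-1d2f3b4c5d6e",
		"a1b2c3d4-e5f6-4a7b-8c9d-0e1f2a3b4c5d",
		"9e8d7c6b-5a49-4382-9170-6f5e4d3c2b1a",
	}
	const totalShards = 2
	for _, uid := range uids {
		for shard := uint64(0); shard < totalShards; shard++ {
			if ownsObject(uid, shard, totalShards) {
				fmt.Printf("%s -> shard %d\n", uid, shard)
			}
		}
	}
}
```

Every instance runs this check against every object it receives. This is why each shard exports only about 1/n of the objects but still pays the cost of watching all of them.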

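If you use sharding, it is a good idea to monitor the sharding-related metrics to make sure the setup behaves as expected. The following two alerting rules cover this: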

```yaml
- alert: KubeStateMetricsShardingMismatch
  annotations:
    description: kube-state-metrics pods are running with different --total-shards configuration, some Kubernetes objects may be exposed multiple times or not exposed at all.
    summary: kube-state-metrics sharding is misconfigured.
  expr: |
    stdvar (kube_state_metrics_total_shards{job="kube-state-metrics"}) != 0
  for: 15m
  labels:
    severity: critical
- alert: KubeStateMetricsShardsMissing
  annotations:
    description: kube-state-metrics shards are missing, some Kubernetes objects are not being exposed.
    summary: kube-state-metrics shards are missing.
  expr: |
    2^max(kube_state_metrics_total_shards{job="kube-state-metrics"}) - 1
      -
    sum( 2 ^ max by (shard_ordinal) (kube_state_metrics_shard_ordinal{job="kube-state-metrics"}) )
    != 0
  for: 15m
  labels:
    severity: critical
```
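Both expressions are easier to read as plain arithmetic:

- **Mismatch rule.** `stdvar` over the `--total-shards` values reported by the pods is zero only when every pod agrees.
- **Missing-shards rule.** Each ordinal k contributes 2^k. With n shards, the distinct ordinals sum to 2^n - 1 exactly when ordinals 0 through n-1 are all present. Because of `max by (shard_ordinal)`, a duplicated ordinal counts only once.

The Go sketch below mirrors this logic. The helper names are hypothetical and not part of KSM or Prometheus.

```go
package main

import "fmt"

// shardingMismatch mirrors `stdvar(kube_state_metrics_total_shards) != 0`;
// PromQL's stdvar is the population variance across the series.
func shardingMismatch(totalShardsPerPod []float64) bool {
	if len(totalShardsPerPod) == 0 {
		return false // no series: the PromQL expression returns nothing
	}
	var mean float64
	for _, v := range totalShardsPerPod {
		mean += v
	}
	mean /= float64(len(totalShardsPerPod))
	var variance float64
	for _, v := range totalShardsPerPod {
		variance += (v - mean) * (v - mean)
	}
	variance /= float64(len(totalShardsPerPod))
	return variance != 0
}

// shardsMissing mirrors the KubeStateMetricsShardsMissing expression:
// (2^totalShards - 1) - sum of 2^ordinal over distinct ordinals.
// A non-zero result fires the alert. Assumes totalShards < 63.
func shardsMissing(totalShards int, ordinals []int) int64 {
	seen := make(map[int]bool)
	var sum int64
	for _, o := range ordinals {
		if !seen[o] { // like `max by (shard_ordinal)`: duplicates count once
			seen[o] = true
			sum += int64(1) << o
		}
	}
	return ((int64(1) << totalShards) - 1) - sum
}

func main() {
	fmt.Println(shardingMismatch([]float64{2, 2}), shardsMissing(2, []int{0, 1}))
	fmt.Println(shardingMismatch([]float64{2, 3}), shardsMissing(2, []int{0}))
}
```

An unexpected extra ordinal also makes the result non-zero (negative), so that case alerts as well.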

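Configuring shards by hand is error-prone, so KSM also supports automatic sharding. You deploy multiple KSM replicas as a StatefulSet, and autosharding lets each shard discover its own position within the StatefulSet, which makes shard configuration automatic. To enable autosharding, kube-state-metrics must run as a StatefulSet. The pod name and namespace must be passed to the kube-state-metrics process through the --pod and --pod-namespace flags, as shown below: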

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kube-state-metrics
  namespace: kube-system
spec:
  replicas: 10
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  serviceName: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.8.0
    spec:
      automountServiceAccountToken: true
      containers:
      - args:
        - --pod=$(POD_NAME)
        - --pod-namespace=$(POD_NAMESPACE)
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.8.0
        # ......
```

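This way of deploying shards is useful when you want to manage KSM shards through a single Kubernetes resource (here, a single StatefulSet) instead of one Deployment per shard. The advantage is especially significant when deploying a large number of shards.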
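The idea behind autosharding can be sketched briefly. StatefulSet pods are named `<statefulset-name>-<ordinal>`, so the ordinal can serve as the shard index and the StatefulSet's replica count as the total. The Go helper below is hypothetical. In KSM, the `--pod` and `--pod-namespace` values let the process look itself up through the API server. This sketch skips that lookup and takes the StatefulSet name and replica count as parameters.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// shardFromPod derives (shard, totalShards) for a StatefulSet pod.
// Hypothetical helper: the StatefulSet name and replica count are passed in
// rather than read from the API server.
func shardFromPod(statefulSet, pod string, replicas int32) (shard, total int32, err error) {
	prefix := statefulSet + "-"
	if !strings.HasPrefix(pod, prefix) {
		return 0, 0, fmt.Errorf("pod %q is not part of StatefulSet %q", pod, statefulSet)
	}
	n, err := strconv.ParseInt(strings.TrimPrefix(pod, prefix), 10, 32)
	if err != nil {
		return 0, 0, fmt.Errorf("pod %q has no numeric ordinal: %w", pod, err)
	}
	if n < 0 || n >= int64(replicas) {
		return 0, 0, fmt.Errorf("ordinal %d out of range for %d replicas", n, replicas)
	}
	return int32(n), replicas, nil
}

func main() {
	shard, total, err := shardFromPod("kube-state-metrics", "kube-state-metrics-1", 2)
	if err != nil {
		panic(err)
	}
	fmt.Printf("shard=%d totalShards=%d\n", shard, total)
}
```

For `kube-state-metrics-1` in a 2-replica StatefulSet this yields shard 1 of 2, the same values that appear in the KSM log output later in this article.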

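Autosharding does have a drawback, which comes from the rolling-update strategy StatefulSets support. Under a StatefulSet, pods are replaced one at a time: each pod is first terminated and then recreated. This makes upgrades slower and causes a brief outage for each shard in turn. If Prometheus scrapes during the upgrade, it may miss some of the metrics exported by kube-state-metrics.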

Example manifests for autosharding live in the examples/autosharding directory and can be deployed directly with:

```shell
$ kubectl apply -k examples/autosharding
```

This deploys 2 KSM instances as a StatefulSet:

```shell
$ kubectl get pods -n kube-system -l app.kubernetes.io/name=kube-state-metrics
NAME                   READY   STATUS    RESTARTS   AGE
kube-state-metrics-0   1/1     Running   0          70m
kube-state-metrics-1   1/1     Running   0          65m
```

Check the logs of either Pod:

```shell
$ kubectl logs -f kube-state-metrics-1 -nkube-system
I0216 05:53:23.151163 1 wrapper.go:78] Starting kube-state-metrics
I0216 05:53:23.154495 1 server.go:125] "Used default resources"
I0216 05:53:23.154923 1 types.go:184] "Using all namespaces"
I0216 05:53:23.155556 1 server.go:166] "Metric allow-denylisting" allowDenyStatus="Excluding the following lists that were on denylist: "
W0216 05:53:23.155792 1 client_config.go:617] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.
I0216 05:53:23.178553 1 server.go:311] "Tested communication with server"
I0216 05:53:23.241024 1 server.go:316] "Run with Kubernetes cluster version" major="1" minor="25" gitVersion="v1.25.3" gitTreeState="clean" gitCommit="434bfd82814af038ad94d62ebe59b133fcb50506" platform="linux/arm64"
I0216 05:53:23.241169 1 server.go:317] "Communication with server successful"
I0216 05:53:23.245132 1 server.go:263] "Started metrics server" metricsServerAddress="[::]:8080"
I0216 05:53:23.246148 1 metrics_handler.go:103] "Autosharding enabled with pod" pod="kube-system/kube-state-metrics-1"
I0216 05:53:23.246233 1 metrics_handler.go:104] "Auto detecting sharding settings"
I0216 05:53:23.246267 1 server.go:252] "Started kube-state-metrics self metrics server" telemetryAddress="[::]:8081"
I0216 05:53:23.253477 1 server.go:69] level=info msg="Listening on" address=[::]:8081
I0216 05:53:23.253477 1 server.go:69] level=info msg="Listening on" address=[::]:8080
I0216 05:53:23.253944 1 server.go:69] level=info msg="TLS is disabled." http2=false address=[::]:8080
I0216 05:53:23.254534 1 server.go:69] level=info msg="TLS is disabled." http2=false address=[::]:8081
I0216 05:53:23.297524 1 metrics_handler.go:80] "Configuring sharding of this instance to be shard index (zero-indexed) out of total shards" shard=1 totalShards=2
I0216 05:53:23.411710 1 builder.go:257] "Active resources" activeStoreNames="certificatesigningrequests,configmaps,cronjobs,daemonsets,deployments,endpoints,horizontalpodautoscalers,ingresses,jobs,leases,limitranges,mutatingwebhookconfigurations,namespaces,networkpolicies,nodes,persistentvolumeclaims,persistentvolumes,poddisruptionbudgets,pods,replicasets,replicationcontrollers,resourcequotas,secrets,services,statefulsets,storageclasses,validatingwebhookconfigurations,volumeattachments"
```

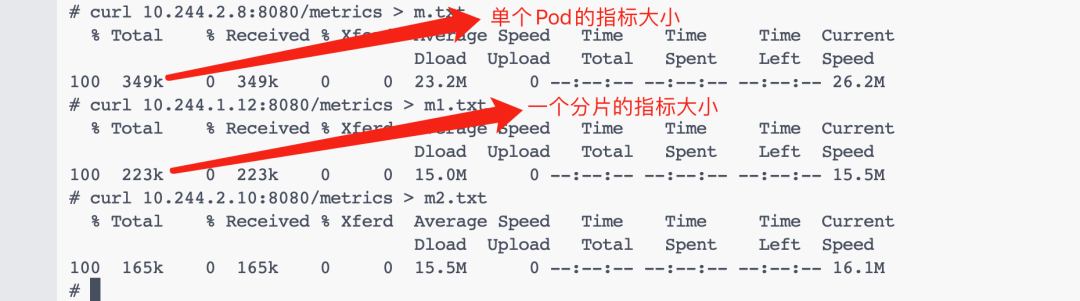

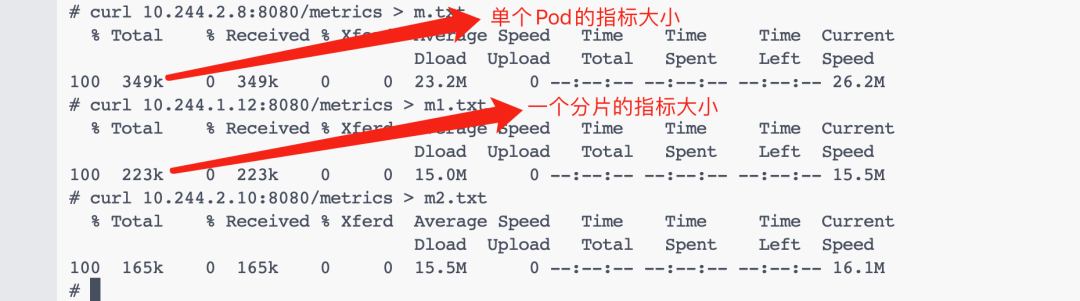

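A log line like "Configuring sharding of this instance to be shard index (zero-indexed) out of total shards" shard=1 totalShards=2 shows that autosharding has taken effect. If we fetch the metrics payload from each shard and compare it with the unsharded setup, each shard's payload is clearly smaller. If a single instance's payload is still too large, simply increase the StatefulSet's replica count.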

We can also shard pod metrics by node by adding the --node and --resources flags. In that case we simply run KSM as a DaemonSet, as shown below:

```yaml
apiVersion: apps/v1
kind: DaemonSet
spec:
  template:
    spec:
      containers:
      - image: registry.k8s.io/kube-state-metrics/kube-state-metrics:IMAGE_TAG
        name: kube-state-metrics
        args:
        - --resources=pods
        - --node=$(NODE_NAME)
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
```
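According to the help text, `--node` relies on the `spec.nodeName` field selector. As a rough illustration of what that narrows down, this Go sketch builds the kind of pod list/watch URL a per-node instance would send to the API server: `GET /api/v1/pods` with `fieldSelector=spec.nodeName=<node>`. The helper name and API server address are hypothetical. KSM itself issues its requests through client-go rather than building URLs like this.

```go
package main

import (
	"fmt"
	"net/url"
)

// podListURL builds a pod list (or watch) URL restricted to one node via the
// spec.nodeName field selector. Hypothetical helper for illustration only.
func podListURL(apiserver, node string, watch bool) (string, error) {
	u, err := url.Parse(apiserver)
	if err != nil {
		return "", err
	}
	u.Path = "/api/v1/pods"
	q := url.Values{}
	q.Set("fieldSelector", "spec.nodeName="+node)
	if watch {
		q.Set("watch", "true")
	}
	u.RawQuery = q.Encode() // keys are sorted; "=" in the value becomes %3D
	return u.String(), nil
}

func main() {
	s, err := podListURL("https://10.0.0.1:6443", "node-1", false)
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```

The API server filters by field selector before returning anything. Each DaemonSet pod therefore receives only the pods on its own node, unlike hash sharding, where every instance still receives every object.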

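For other metrics, you can likewise use --resources to run dedicated instances, or keep using sharding. In short, running kube-state-metrics on a large cluster calls for several optimizations: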

- Filter out metrics and labels you do not need

- Shard KSM to reduce the load on each instance

- Optionally run a separate DaemonSet dedicated to pod metrics

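Some may ask: what if our own application metrics are also enormous? Then the application owners have to help. First, establish whether such a large volume of metrics is actually expected. If the requirements really call for it, the application also needs to find a way to support sharding.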