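Introduction

Large language models have proved to be a revolutionary technology. Many applications that build on their capabilities have already been developed, and many more are expected soon. One of the most interesting uses of large language models is deploying them as intelligent assistants that help human users with a variety of tasks.

Chat models trained with instruction tuning and reinforcement learning from human feedback (RLHF) have shown very promising abilities to follow human instructions and carry out assigned tasks. However, their applicability is limited to tasks that can be expressed through language alone.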

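Multimodal conversational models aim to unleash the power of large language models on problems that require combining natural language with other modalities. In particular, vision-language models have received increasing attention since vision capabilities were added to GPT-4 with GPT-4V.

Augmenting GPT-4's natural-language capabilities with image understanding has produced a powerful chat assistant that can help users with tasks requiring both visual and language understanding. Although GPT-4V's vision capabilities are impressive, closed-source models limit the potential for research and experimentation with this technology. Fortunately, several open-source models have brought the power of vision-language models to the community in an accessible and transparent way. These models also continue the trend, already seen in open-source large language models, toward greater compute and memory efficiency. This is an important feature because it facilitates widespread adoption.

In this tutorial, I will walk through the process of creating a vision chat assistant using the LLaVA (Large Language and Vision Assistant) model introduced in the paper Visual Instruction Tuning (https://arxiv.org/abs/2304.08485). I will first give a brief introduction to the LLaVA model and its improvements, and then discuss a simple implementation of a vision chat assistant based on the code provided in the official repository (https://github.com/haotian-liu/LLaVA). Finally, I will present some examples I crafted to showcase the model's capabilities and limitations.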

The LLaVA model

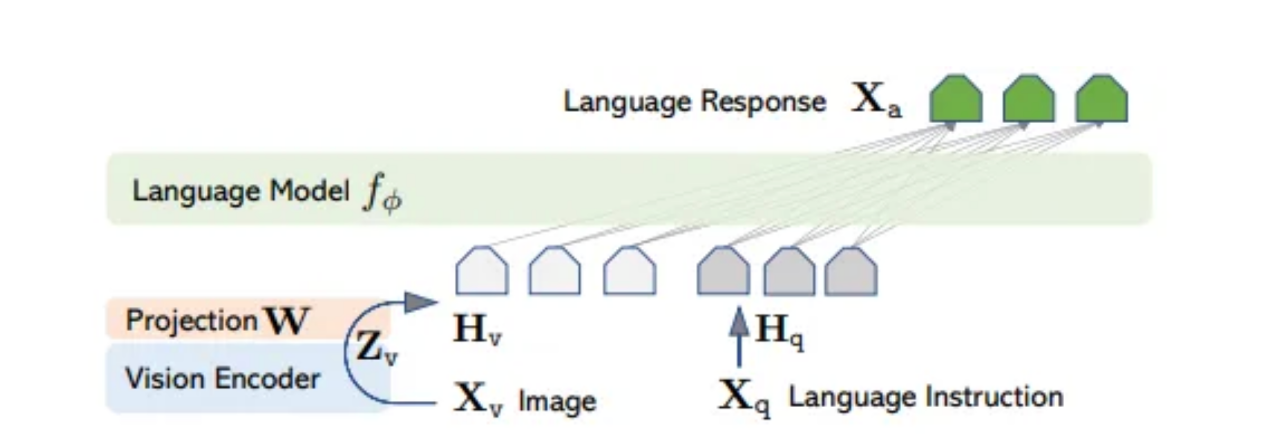

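The LLaVA model was introduced in the paper Visual Instruction Tuning mentioned above and further improved in Improved Baselines with Visual Instruction Tuning (https://arxiv.org/abs/2310.03744), also referred to as LLaVA-1.5. The idea behind it is to extract visual embeddings from an image and, by feeding them to a large language model, treat them in the same way as embeddings coming from language tokens. Intuitively, we can think of the image as being described with "words" that the language model then uses to generate its answer. To choose the right "words", the model uses a pre-trained CLIP visual encoder to extract the visual embeddings and then projects them into the word embedding space of the language model. The latter operation is performed by a vision-language connector, which was a simple linear layer in the first paper and was later replaced by a more expressive multilayer perceptron (MLP) in Improved Baselines with Visual Instruction Tuning. The architecture of the model is depicted as follows:

Architecture diagram of the LLaVA model

The projection W is a simple linear layer in LLaVA and an MLP in LLaVA-1.5. Image from the paper Visual Instruction Tuning.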
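To make the role of the projection W more concrete, here is a minimal pure-Python sketch with toy dimensions. The sizes, the random weights, and the helper names (`linear`, `gelu`, `linear_projector`, `mlp_projector`) are illustrative assumptions and not the LLaVA implementation; the two-layer MLP with a GELU activation is only meant to mirror the kind of connector described for LLaVA-1.5.

```python
import math
import random


def linear(x, weight, bias):
    """Compute y = x @ weight + bias for one vector x (weight is d_in x d_out)."""
    return [sum(xi * w for xi, w in zip(x, col)) + b
            for col, b in zip(zip(*weight), bias)]


def gelu(v):
    """Exact GELU activation applied element-wise."""
    return [0.5 * t * (1.0 + math.erf(t / math.sqrt(2.0))) for t in v]


def random_matrix(rows, cols, rng):
    """Toy weight matrix; real weights would be learned during training."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def linear_projector(patches, w, b):
    """LLaVA-style connector: one linear layer per visual embedding."""
    return [linear(p, w, b) for p in patches]


def mlp_projector(patches, w1, b1, w2, b2):
    """LLaVA-1.5-style connector: linear -> GELU -> linear."""
    return [linear(gelu(linear(p, w1, b1)), w2, b2) for p in patches]


rng = random.Random(0)
n_patches, d_vision, d_model = 4, 6, 8  # toy sizes, far smaller than real models

# Stand-in for the output of the (frozen) vision encoder.
patches = [[rng.gauss(0.0, 1.0) for _ in range(d_vision)]
           for _ in range(n_patches)]

w = random_matrix(d_vision, d_model, rng)
visual_tokens = linear_projector(patches, w, [0.0] * d_model)

w1 = random_matrix(d_vision, d_model, rng)
w2 = random_matrix(d_model, d_model, rng)
visual_tokens_mlp = mlp_projector(patches, w1, [0.0] * d_model,
                                  w2, [0.0] * d_model)

# Stand-in for the embeddings of three text tokens of the prompt.
text_tokens = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(3)]

# The language model receives one sequence mixing visual and text embeddings.
sequence = visual_tokens_mlp + text_tokens
print(len(sequence), len(sequence[0]))  # 7 8
```

After the projection, every visual embedding has the same width as a word embedding, which is what allows the language model to process both kinds of input in a single sequence.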

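One advantage of this approach is that, because it starts from a pre-trained vision encoder and a pre-trained language model, only the vision-language connector, a lightweight module, has to be learned from scratch. In particular, training LLaVA consists of just two stages:

- Pre-training for feature alignment: both the pre-trained vision encoder and the language model are frozen, and only the weights of the vision-language connector are updated. All training samples consist of text-image pairs packed into single-turn conversations. This stage aims to train the vision-language connector to align the embeddings of the vision encoder with the text embeddings of the language model.

- Fine-tuning with visual instructions: in this stage, only the weights of the vision encoder are frozen, while the vision-language connector and the language model are fine-tuned together. The model is fine-tuned on image-based instruction-following tasks. Notably, some of this data was created by using language-only GPT-4 to generate instruction-following samples from the captions of the images and the bounding-box coordinates of the depicted entities.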
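The two stages differ only in which modules receive gradient updates. The sketch below summarizes this as a small lookup table; the module and stage names are hypothetical labels chosen for illustration, not identifiers from the LLaVA code base.

```python
# Hypothetical module names for the three components described above.
MODULES = ("vision_encoder", "connector", "language_model")

# Which modules are updated in each training stage, as described in the text.
TRAINABLE = {
    "feature_alignment_pretraining": {"connector"},
    "visual_instruction_tuning": {"connector", "language_model"},
}


def requires_grad(stage: str) -> dict:
    """Return a module -> bool map telling whether the module is trained."""
    trainable = TRAINABLE[stage]
    return {module: module in trainable for module in MODULES}


for stage in TRAINABLE:
    print(stage, requires_grad(stage))
```

In a real training script, a map like this would typically be used to switch gradient tracking on or off for the parameters of each module before building the optimizer.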

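Implementing a vision chatbot

Creating a vision chatbot with the code provided in the official repository (https://github.com/haotian-liu/LLaVA) is fairly easy. The repository also provides standardized chat templates that can be used to parse the inputs into the right format. Following the format used in training is essential for the quality of the answers the model generates. The right template depends on the language model used. The template for LLaVA-1.5, which is based on a pre-trained Vicuna language model, looks like this: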

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.
    USER: <im_start><image><im_end> User's prompt
    ASSISTANT: Assistant answer
    USER: Another prompt

The first few lines are the general system prompt used by the model. The special tokens <im_start>, <image>, and <im_end> mark the place where the embeddings representing the image will be inserted.
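As a rough illustration of how such a template turns a conversation into a single prompt string, here is a minimal sketch. The `build_prompt` helper is hypothetical, and joining the turns with newlines is a simplification; the templates in the repository define the exact separators and end-of-sequence markers, so they should be preferred in practice.

```python
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's "
    "questions."
)
IMAGE_PLACEHOLDER = "<im_start><image><im_end>"


def build_prompt(turns, system=SYSTEM_PROMPT):
    """Build a prompt from (user_prompt, assistant_answer) pairs.

    Use None as the assistant answer of the last turn to leave the prompt
    open for the model to complete.
    """
    parts = [system]
    for i, (user, assistant) in enumerate(turns):
        if i == 0:
            # The image placeholder is only attached to the first user turn.
            user = f"{IMAGE_PLACEHOLDER} {user}"
        parts.append(f"USER: {user}")
        parts.append("ASSISTANT:" if assistant is None
                     else f"ASSISTANT: {assistant}")
    return "\n".join(parts)


prompt = build_prompt([
    ("Describe the image.", "A white tiger lying on a rock."),
    ("What is unique about this tiger?", None),
])
print(prompt)
```

The prompt ends with an open `ASSISTANT:` turn, which is where the model continues the text with its answer.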

The chatbot can then be defined in a simple Python class.

    from io import BytesIO

    import requests
    import torch
    from PIL import Image
    from transformers import AutoTokenizer, BitsAndBytesConfig

    # Import paths as laid out in the official LLaVA repository.
    from llava.constants import (DEFAULT_IM_END_TOKEN, DEFAULT_IM_START_TOKEN,
                                 DEFAULT_IMAGE_TOKEN, IMAGE_TOKEN_INDEX)
    from llava.conversation import SeparatorStyle, conv_templates
    from llava.mm_utils import KeywordsStoppingCriteria, tokenizer_image_token
    from llava.model import LlavaLlamaForCausalLM
    from llava.utils import disable_torch_init


    class LLaVAChatBot:
        def __init__(self,
                     model_path: str = 'liuhaotian/llava-v1.5-7b',
                     device_map: str = 'auto',
                     load_in_8_bit: bool = True,
                     **quant_kwargs) -> None:
            self.model = None
            self.tokenizer = None
            self.image_processor = None
            self.conv = None
            self.conv_img = None
            self.img_tensor = None
            self.roles = None
            self.stop_key = None
            self.load_models(model_path,
                             device_map=device_map,
                             load_in_8_bit=load_in_8_bit,
                             **quant_kwargs)

        def load_models(self, model_path: str,
                        device_map: str,
                        load_in_8_bit: bool,
                        **quant_kwargs) -> None:
            """Load the model, processor and tokenizer."""
            # Skip the default weight initialization, which is redundant when
            # loading pre-trained weights; call it before building the model.
            disable_torch_init()
            # Fold the 8-bit flag into the quantization config so that the
            # setting is specified in one place only.
            quant_cfg = BitsAndBytesConfig(load_in_8bit=load_in_8_bit,
                                           **quant_kwargs)
            self.model = LlavaLlamaForCausalLM.from_pretrained(
                model_path,
                low_cpu_mem_usage=True,
                device_map=device_map,
                quantization_config=quant_cfg)
            self.tokenizer = AutoTokenizer.from_pretrained(model_path,
                                                           use_fast=False)
            # The vision tower (CLIP encoder) is loaded separately.
            vision_tower = self.model.get_vision_tower()
            vision_tower.load_model()
            vision_tower.to(device='cuda')
            self.image_processor = vision_tower.image_processor

        def setup_image(self, img_path: str) -> None:
            """Load and process the image."""
            if img_path.startswith(('http://', 'https://')):
                response = requests.get(img_path)
                self.conv_img = Image.open(
                    BytesIO(response.content)).convert('RGB')
            else:
                self.conv_img = Image.open(img_path).convert('RGB')
            # Half-precision tensor on the GPU, as expected by the model.
            self.img_tensor = self.image_processor.preprocess(
                self.conv_img,
                return_tensors='pt'
            )['pixel_values'].half().cuda()

        def generate_answer(self, **kwargs) -> str:
            """Generate an answer from the current conversation."""
            raw_prompt = self.conv.get_prompt()
            input_ids = tokenizer_image_token(
                raw_prompt,
                self.tokenizer,
                IMAGE_TOKEN_INDEX,
                return_tensors='pt').unsqueeze(0).cuda()
            stopping = KeywordsStoppingCriteria([self.stop_key],
                                                self.tokenizer,
                                                input_ids)
            with torch.inference_mode():
                output_ids = self.model.generate(input_ids,
                                                 images=self.img_tensor,
                                                 stopping_criteria=[stopping],
                                                 **kwargs)
            # Decode only the newly generated tokens, not the prompt.
            outputs = self.tokenizer.decode(
                output_ids[0, input_ids.shape[1]:]
            ).strip()
            # Store the answer in the pending assistant turn.
            self.conv.messages[-1][-1] = outputs
            # Drop the trailing end-of-sequence marker from the returned text.
            return outputs.rsplit('</s>', 1)[0]

        def get_conv_text(self) -> str:
            """Return full conversation text."""
            return self.conv.get_prompt()

        def start_new_chat(self,
                           img_path: str,
                           prompt: str,
                           do_sample=True,
                           temperature=0.2,
                           max_new_tokens=1024,
                           use_cache=True,
                           **kwargs) -> str:
            """Start a new chat with a new image."""
            conv_mode = "v1"
            self.setup_image(img_path)
            self.conv = conv_templates[conv_mode].copy()
            self.roles = self.conv.roles
            # The image placeholder tokens go in front of the first prompt.
            first_input = (DEFAULT_IM_START_TOKEN + DEFAULT_IMAGE_TOKEN +
                           DEFAULT_IM_END_TOKEN + '\n' + prompt)
            self.conv.append_message(self.roles[0], first_input)
            self.conv.append_message(self.roles[1], None)
            if self.conv.sep_style == SeparatorStyle.TWO:
                self.stop_key = self.conv.sep2
            else:
                self.stop_key = self.conv.sep
            answer = self.generate_answer(do_sample=do_sample,
                                          temperature=temperature,
                                          max_new_tokens=max_new_tokens,
                                          use_cache=use_cache,
                                          **kwargs)
            return answer

        def continue_chat(self,
                          prompt: str,
                          do_sample=True,
                          temperature=0.2,
                          max_new_tokens=1024,
                          use_cache=True,
                          **kwargs) -> str:
            """Continue the existing chat."""
            if self.conv is None:
                raise RuntimeError("No existing conversation found. Start a new "
                                   "conversation using the `start_new_chat` method.")
            self.conv.append_message(self.roles[0], prompt)
            self.conv.append_message(self.roles[1], None)
            answer = self.generate_answer(do_sample=do_sample,
                                          temperature=temperature,
                                          max_new_tokens=max_new_tokens,
                                          use_cache=use_cache,
                                          **kwargs)
            return answer

If you are familiar with the Transformers library (https://github.com/huggingface/transformers), you will recognize many of its usual features, and the operations performed should be easy to follow. Let's quickly go over the methods of the LLaVAChatBot class defined above.

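- load_models: this method loads the language model, the tokenizer, and the image processor with the specified parameters for quantization with the BitsAndBytes library. The code follows the from_pretrained pattern used by Hugging Face Transformers models. BitsAndBytes allows quantizing the model to 8 bits or 4 bits to reduce GPU memory requirements.

- setup_image: this method loads the image from a local path or a URL and converts it to a tensor using the image processor.

- generate_answer: this method returns the model's answer, continuing the current conversation about the provided image. The generate method of the LLaVA model is analogous to the generate method of Hugging Face Transformers models.

- get_conv_text: this method returns the raw text of the conversation so far.

- start_new_chat: this is one of the two main methods of the chatbot and is used to start a new chat with the model. Given an image and the initial prompt to pass to the model, it creates a new conversation. It sets up the conversation using the templates defined in the repository, following the format discussed in the previous section.

- continue_chat: this is the other main method; it continues an existing conversation about an image.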
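Both chat methods default to do_sample=True with temperature=0.2. As a rough illustration of what the temperature does, the sketch below applies a temperature-scaled softmax to a few made-up logits. The logits and the helper name are hypothetical; the actual sampling happens inside the model's generate method.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities after dividing them by the temperature."""
    scaled = [logit / temperature for logit in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens

probs_default = softmax_with_temperature(logits, 1.0)
probs_low_temp = softmax_with_temperature(logits, 0.2)

print([round(p, 3) for p in probs_default])
print([round(p, 3) for p in probs_low_temp])
```

With the lower temperature, almost all of the probability mass moves to the highest-scoring token, so sampling stays close to greedy decoding while still allowing some variation.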

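I have provided the full code in a Colab notebook (https://colab.research.google.com/drive/1tq9K3utBJ4VeMmuUarMEFCmaekMUG_Zd?usp=sharing), so interested readers can try it themselves.

Test examples

In this section, I collect some interesting examples obtained while testing the capabilities of the model. All the examples in this post were created with the llava-v1.5-7b model with 8-bit quantization.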
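To get a feeling for why quantization matters here, the following back-of-the-envelope sketch estimates the memory taken by the language model weights alone at different precisions. It assumes that "7b" means roughly 7 billion parameters and ignores the vision encoder, activations, and the generation cache, so the real footprint is higher.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to store the weights, in gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


n_params = 7e9  # assumption: "7b" taken as roughly 7 billion parameters

for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(n_params, bits):.1f} GB")
# 16-bit: ~14.0 GB
# 8-bit: ~7.0 GB
# 4-bit: ~3.5 GB
```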

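First, let's look at the model's ability to describe and understand images. Below, I asked the model to describe a picture of a white tiger.

Photo by Joshua J. Cotten on Unsplash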

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    Describe the image in details.

    ASSISTANT: The image features a white tiger laying down on a rocky surface,
    possibly a stone wall or a rock. The tiger appears to be relaxed and
    comfortable in its environment. The tiger's position is such that it covers a
    significant portion of the image, from the left side to almost the entire right
    side. The rocky surface provides a natural and rugged backdrop for the scene.</s></s>

    USER: What is unique about this tiger?

    ASSISTANT: This tiger is unique because it is a white tiger, which is an
    extremely rare and visually striking variation of the species. White tigers are
    born with a genetic mutation that causes their fur to be predominantly white,
    with the rest of their body being a mix of orange and black stripes. This
    distinctive appearance sets them apart from the more common orange and black
    striped tigers.</s></s>

The model seems able to describe the image in detail and also to reason about its subject, correctly noting that a white tiger is a rare occurrence.

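Next, I tested whether the model can give the user actionable instructions for various tasks. This is what distinguishes a "captioning" model from a true vision chat assistant. For example, can the model suggest recipes from the ingredients shown in an image? The result is reported below:

Photo by ThermoPro on Unsplash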

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    What can I cook with these ingredients?

    ASSISTANT: With the ingredients displayed on the table, you can prepare a
    delicious roast dinner. The meat, which is a large piece of beef, can be
    seasoned and cooked in the oven, while the vegetables, including carrots,
    potatoes, and onions, can be roasted alongside the meat. You can also prepare a
    side dish with the potatoes, carrots, and onions, which can be served alongside
    the roast dinner. The presence of a bowl of sauce suggests that you can use it
    as a dipping sauce or to enhance the flavors of the roast and vegetables.
    Overall, this combination of ingredients offers a hearty and flavorful meal
    that can be enjoyed with family and friends.</s></s>

    USER: Could the recipe you suggested be included in a vegetarian diet?

    ASSISTANT: The recipe I suggested, which involves roasting a large piece of
    beef and vegetables, is not suitable for a vegetarian diet. The beef is the
    main protein source in the dish, and it is not suitable for vegetarians who
    avoid consuming meat. However, you can still create a vegetarian-friendly meal
    using the vegetables and sauce available on the table. For example, you can
    prepare a vegetable roast with the carrots, potatoes, and onions, and serve it
    alongside a side of mashed potatoes or a vegetable-based sauce. This way, you
    can still enjoy the flavors and textures of the dish without including meat.</s></s>

    USER: Can you suggest a vegetarian recipe with minimal modifications of the
    depicted ingredients?

    ASSISTANT: Certainly! Here's a vegetarian recipe that can be made using the
    ingredients available on the table:

    Roasted Vegetable Medley with Sauce

    Ingredients:
    * Carrots
    * Potatoes
    * Onions
    * Olive oil
    * Salt
    * Pepper
    * Herbs (such as thyme, rosemary, or parsley)
    * Sauce (optional)

    Instructions:
    1. Preheat your oven to 400°F (200°C).
    2. Wash and peel the carrots, potatoes, and onions. Cut them into bite-sized
    pieces.
    3. In a large bowl, toss the vegetables with olive oil, salt, and pepper.
    4. Spread the vegetables evenly on a baking sheet lined with parchment paper.
    5. Roast the vegetables in the preheated oven for 20-25 minutes, or until they
    are tender and slightly browned.
    6. If desired, prepare a sauce by combining ingredients such as tomatoes,
    garlic, and herbs. You can use this sauce as a dipping sauce or to enhance the
    flavors of the roasted vegetables.
    7. Serve the roasted vegetable medley alongside a side of mashed potatoes or a
    vegetable-based sauce.

    This vegetarian recipe allows you to enjoy the flavors and textures of the
    roasted vegetables without including meat. The sauce can be easily customized
    to suit your taste preferences, and the dish can be served alongside a variety
    of side dishes, such as mashed potatoes or a vegetable-based sauce.</s></s>

The model seems to have a good understanding of the ingredients in the image, and it is also able to connect individual words to more abstract concepts (i.e., a meal containing meat is not vegetarian).

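Now let's see how the model can be used to get suggestions. This is a useful feature for generating new ideas when brainstorming and can provide real value to users. In the conversation below, I asked the model for suggestions on changing the look of a café.

Photo by Michał Mancewicz on Unsplash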

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    How can I renovate this café to make it look more vintage?

    ASSISTANT: To make the café look more vintage, you can consider the following
    changes:
    1. Update the lighting: Replace the current lighting with vintage-style lamps
    or lanterns, which will create a warm and cozy atmosphere.
    2. Add vintage decorations: Incorporate antique items, such as vintage clocks,
    artwork, or decorative plates, to create a nostalgic ambiance.
    3. Use vintage furniture: Replace the current stools with vintage-style chairs
    or barstools, which can add character and charm to the space.
    4. Incorporate greenery: Add more greenery, such as potted plants or vines, to
    create a lush and inviting atmosphere.
    5. Update the bar: Replace the current bar with a vintage-style bar, complete
    with a vintage-inspired backsplash and countertop.
    6. Use vintage-style glassware: Replace the current glassware with vintage-style
    glasses, such as wine glasses, beer mugs, or cocktail shakers.
    7. Add vintage-style signage: Replace the current signage with vintage-style
    menu boards or chalkboards, which can add a touch of nostalgia to the space.
    8. Use vintage-style wallpaper or paint: Apply a vintage-style wallpaper or
    paint to the walls, which can create a unique and charming atmosphere.
    By implementing these changes, the café will appear more vintage and inviting,
    creating a memorable experience for its patrons.</s></s>

The suggestions look relevant, although rather generic, and identify elements of the current scene that could be changed to give it a more vintage style.

Let's move on to something practical that can help users in their daily activities. Can the model help write advertisements or listings?

Photo by Eugene Chystiakov on Unsplash

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    Write an advertisment for this chair

    ASSISTANT: Introducing the modern and stylish black and wood chair, perfect for
    any contemporary setting. This chair features a sleek black seat and a wooden
    base, creating a striking contrast against any background. Its minimalist
    design makes it a versatile addition to any room, whether it's used as a dining
    chair, a side table, or a statement piece in a living space. The chair's sturdy
    construction ensures it can withstand daily use, while its clean lines and
    sleek appearance add a touch of sophistication to any room. Upgrade your living
    space with this stylish and functional chair today.</s></s>

    USER: Write now an advertisement for the same chair but targeting cafes

    ASSISTANT: Attention café owners and design enthusiasts! Introducing the black
    and wood chair, the perfect addition to your café's seating area. This stylish
    and functional chair offers a modern touch to your establishment, with its
    sleek black seat and wooden base creating a striking contrast against any
    background. Its minimalist design makes it a versatile choice for any café
    setting, whether it's used as a dining chair, a side table, or a statement
    piece in a lounge area. The chair's sturdy construction ensures it can
    withstand daily use, while its clean lines and sleek appearance add a touch of
    sophistication to any room. Upgrade your café's ambiance with this stylish and
    functional chair today.</s></s>

The model correctly pinpoints the features of the chair and creates an appealing advertisement, also tailoring the answer to a different target audience when asked.

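As a last test, let's see whether prompting techniques that have proved beneficial for large language models can also be used with LLaVA. Below is an example of attempting zero-shot chain-of-thought prompting.

Photo by Arnold Middelkoop on Unsplash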

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    Would the fish depicted in the image float if thrown in water? Think step by
    step.

    ASSISTANT: The fish depicted in the image is a large white fish sculpture or
    statue, which is lying on the ground on a brick walkway near a body of water.
    If thrown into the water, the fish would not float because it is a solid,
    inanimate object. It would sink to the bottom of the water, as it is not
    buoyant due to its composition and size. The fish's position on the ground and
    its lack of buoyancy make it impossible for it to float in water.</s></s>

    USER: What material could be used to make a fish sculpture that floats on
    water?

    ASSISTANT: To make a fish sculpture that floats on water, one could use a
    lightweight, buoyant material such as expanded polystyrene foam or polyethylene
    foam. These materials are commonly used for making buoyant objects like life
    jackets, flotation devices, and fishing floats. By shaping the foam into the
    desired fish form, it would be able to float on the water's surface,
    simulating the behavior of a real fish.</s></s>

The model is able to explain why the fish would not float, correctly identifying that it is not a real fish. It also follows the instruction to think step by step before giving the final answer. For comparison, here is an example of the conversation without the "Think step by step" prompt.

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    Would the fish depicted in the image float if thrown in water?

    ASSISTANT: No, the fish depicted in the image would not float if thrown in
    water. It is a large, white, stone fish sculpture, which is not a real fish
    and does not have the buoyancy properties of a natural fish.</s></s>

Although the final answer is still correct, the explanation given in this case is much less detailed, and the answer is given at the start of the sentence. Since the generative language model used by LLaVA is causal, this means that the answer provided does not rely on the explanation that follows it. It would be interesting to carry out more extensive experiments to test whether chain-of-thought (CoT) prompting can improve the performance of vision-language models on complex reasoning tasks, similar to what has been observed for large language models in Chain-of-Thought Prompting Elicits Reasoning in Large Language Models (https://arxiv.org/abs/2201.11903) and Large Language Models are Zero-Shot Reasoners (https://arxiv.org/abs/2205.11916).
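The point about causality can be illustrated with a toy causal attention mask: each position can only attend to itself and to earlier positions, so an answer token that comes first cannot depend on the explanation tokens that come after it. The token list and the helper below are illustrative assumptions and are not taken from the LLaVA code.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Toy answer: the verdict "No" comes before the explanation.
tokens = ["No", ",", "it", "is", "a", "stone", "sculpture"]
mask = causal_mask(len(tokens))

answer_pos = tokens.index("No")
visible_to_answer = [tok for tok, allowed in zip(tokens, mask[answer_pos])
                     if allowed]
visible_to_last = [tok for tok, allowed in zip(tokens, mask[-1]) if allowed]

print(visible_to_answer)     # ['No']
print(len(visible_to_last))  # 7
```

In a real prompt the positions before the answer would hold the system prompt, the image embeddings, and the question, which is why asking the model to reason first puts the explanation where the final answer can actually use it.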

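Limitations

As powerful as it is, LLaVA comes with limitations. For example, the model has been trained to use only one image per chat, so it cannot handle more complex conversations that require interacting with multiple images. This limitation is not intrinsic to the architecture of the model and could be addressed by including multi-image chat data during training.

During testing, I noticed that the model (at least the 8-bit quantized version of the 1.5 7b model) has trouble counting elements in an image and, more generally, handling numerical tasks. Below is an example.

Photo by John Matychuk on Unsplash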

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    How many car are inside the parking lot?

    ASSISTANT: There are several cars parked inside the parking lot.</s></s>

    USER: List the numbers of the parking spots occupied by cars

    ASSISTANT: The parking spots occupied by cars are 66, 67, 68, 69, 70, and 71.</s></s>

Like all large language models, the model is also prone to hallucinations and can easily be tricked into making mistakes with appropriate prompts. For example, if given a picture of a desert and asked what species the fish in the image is, it hallucinates a desert fish.

Photo by Wolfgang Hasselmann on Unsplash

    A chat between a curious user and an artificial intelligence assistant. The
    assistant gives helpful, detailed, and polite answers to the user's questions.

    USER: <im_start><image><im_end>
    Tell me the species of the fish in this image

    ASSISTANT: The fish in this image is a desert fish, which is a species adapted
    to living in desert environments.</s></s>

Conclusion

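LLaVA shows impressive capabilities in vision-language understanding and marks a clear step forward for multimodal open-source vision-language models. One of its biggest advantages is that it is lightweight to train and fine-tune. For example, the full training of LLaVA 1.5 13b took only 1.2M data samples and roughly 1 day on a single 8-A100 node. This makes it suitable for fine-tuning on specific domains to obtain an expert assistant, as was done, for example, in LLaVA-Med: Training a Large Language-and-Vision Assistant for Biomedicine in One Day (https://arxiv.org/abs/2306.00890).

Adding vision capabilities to chat assistants expands the range of applications of such models, bringing their revolutionary potential to more complex and nuanced tasks. Treating image features as language tokens also opens up the possibility of using all the advanced prompting techniques developed for text-only language models, and of extending them further. For example, retrieval-augmented generation could be extended by retrieving both texts and images relevant to the conversation. In fact, using the shared image-text embedding space of CLIP, it is possible to retrieve both external documents and external images starting from either an input text or an input picture.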
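The retrieval idea can be sketched with a nearest-neighbour search over a shared embedding space. In the example below, the three-dimensional vectors are made-up stand-ins for CLIP embeddings, and the index entries and the `retrieve` helper are hypothetical; a real system would compute the vectors with the CLIP text and image encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: texts and images live in the same vector space.
index = {
    "text: roast beef recipe": [0.9, 0.1, 0.0],
    "image: roasted_vegetables.jpg": [0.8, 0.3, 0.1],
    "text: vintage cafe decor": [0.0, 0.2, 0.9],
}


def retrieve(query, index, k=2):
    """Return the k entries whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda name: cosine(query, index[name]),
                    reverse=True)
    return ranked[:k]


# Pretend embedding of an input photo showing cooking ingredients.
query = [1.0, 0.2, 0.0]
print(retrieve(query, index))
```

Because the query and the indexed items share one space, the same function serves text-to-image, image-to-text, and image-to-image retrieval; the retrieved items could then be added to the conversation as extra context.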

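Another interesting direction for extending the capabilities of the model is presented in LLaVA-Interactive: An All-in-One Demo for Image Chat, Segmentation, Generation and Editing (https://arxiv.org/abs/2311.00571). The main idea is to combine the capabilities of vision-language chat models, text-to-image generative models, and other vision models (such as image segmentation models) to obtain an assistant that can handle multimodal inputs and generate multimodal outputs.

In conclusion, LLaVA marks an important step for open-source multimodal generative models, which have shown impressive capabilities and are attracting a lot of interest. With the wider adoption of open-source models, I believe we will soon see a rapid increase in new applications built on these powerful models.

Thank you for reading! If you want to try the code yourself, you can look at this Colab notebook (https://colab.research.google.com/drive/1tq9K3utBJ4VeMmuUarMEFCmaekMUG_Zd?usp=sharing).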

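About the translator

朱先忠 (Zhu Xianzhong) is a 51CTO community editor, a 51CTO expert blogger and lecturer, a computer science teacher at a university in Weifang, and a veteran freelance programmer.

Original title: Create your Vision Chat Assistant with LLaVA, by Gabriele Sgroi

Link: https://towardsdatascience.com/create-your-vision-chat-assistant-with-llava-610b02c3283e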