Machine Learning's New Favorite: A Collection of 60 Contrastive Learning Papers and Implementations, Sorted by Category and More Comprehensive Than Ever

Hi everyone, I'm Duibai.

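Contrastive learning has become extremely popular lately. It is now a hot topic at every major top conference. The reason is simple: it addresses a classic problem in supervised training, which is the scarcity of labeled data (a very common situation in industry). The arrival of contrastive learning has therefore been a major boon for CV, NLP, and recommender systems. Specifically: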

1. In CV, it answers the question of how to obtain a pretrained model without a larger labeled dataset: use self-supervised pretraining to learn the prior knowledge distribution contained in the images themselves;

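2. In NLP, it has confirmed an empirical observation. The more data used for self-supervised pretraining and the more complex the model, the more knowledge the model can absorb, and the better it performs on downstream tasks;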

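3. In recommendation, it addresses four problems:
   - data sparsity;
   - the long-tail distribution of items;
   - aggregating multiple different views in cross-domain recommendation;
   - improving model robustness or resistance to noise.

   If you are interested, see my article "Contrastive Learning Methods You Must Learn for Recommender Systems".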
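Most of the methods collected below share one core idea. They pull an anchor's representation toward a positive sample, for example another augmented view of the same image. They also push it away from negative samples.

As a rough illustration, here is a minimal single-anchor sketch of an InfoNCE-style loss. This is the family of objectives that SimCLR's NT-Xent loss and MoCo's contrastive loss belong to. The sketch is simplified and is not the exact formulation of any listed paper. It makes several illustrative assumptions:
- it uses cosine similarity;
- it uses a temperature of 0.1;
- it runs on toy 2-D vectors;
- it is plain Python rather than a batched GPU implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Single-anchor InfoNCE loss (illustrative sketch).

    loss = -log( exp(s_pos / t) / sum_j exp(s_j / t) ),
    where s_j ranges over the positive and all negatives.
    The temperature of 0.1 is an illustrative assumption.
    """
    # Logit 0 is the positive pair; the rest are negative pairs.
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    # Negative log of the softmax probability assigned to the positive.
    return log_denominator - logits[0]


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    # Positive is close to the anchor: the loss is near 0.
    print(info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]]))
    # A negative is closer to the anchor than the "positive": the loss is large.
    print(info_nce(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]]))
```

The loss is the negative log of the softmax probability assigned to the positive pair. It approaches 0 when the positive is much more similar to the anchor than every negative. It grows when some negative looks more similar than the positive. Practical implementations compute the same quantity over whole batches of embeddings inside a deep-learning framework.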

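To give a clearer picture of the research frontier and latest progress in contrastive learning, I have compiled the contrastive learning papers from the major top conferences of roughly the past year. The collection covers eleven conferences: ICLR2021, SIGIR2021, WWW2021, CVPR2021, AAAI2021, NAACL2021, ICLR2020, NIPS2020, CVPR2020, ICML2020, and KDD2020. It contains 60 papers in total. It focuses mainly on long papers and research papers, with a small number of short papers and industry papers.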

The paper list in this article has been synced to GitHub, where it will be continually updated with new top-conference papers. Feel free to follow and star it~

https://github.com/coder-duibai/Contrastive-Learning-Papers-Codes

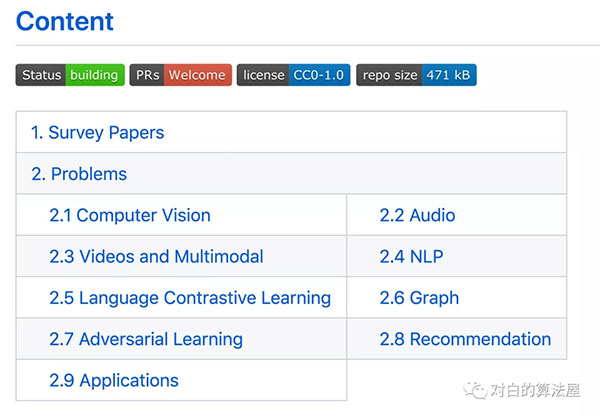

Nine categories

Awesome Contrastive Learning Papers & Codes.

I have sorted the 60 papers and their code into nine categories.

I. Computer Vision

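The first category is computer vision. It is also the richest section, with 19 papers.

It includes several well-known recent models, such as MoCo and MoCo v2 from Kaiming He et al., and SimCLR and SimCLR v2 from Geoffrey Hinton's group.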

1. [PCL] Prototypical Contrastive Learning of Unsupervised Representations. ICLR2021.

Authors: Junnan Li, Pan Zhou, Caiming Xiong, Steven C.H. Hoi. paper code

2. [BalFeat] Exploring Balanced Feature Spaces for Representation Learning. ICLR2021.

Authors: Bingyi Kang, Yu Li, Sa Xie, Zehuan Yuan, Jiashi Feng. paper

3. [MiCE] MiCE: Mixture of Contrastive Experts for Unsupervised Image Clustering. ICLR2021.

Authors: Tsung Wei Tsai, Chongxuan Li, Jun Zhu. paper code

4. [i-Mix] i-Mix: A Strategy for Regularizing Contrastive Representation Learning. ICLR2021.

Authors: Kibok Lee, Yian Zhu, Kihyuk Sohn, Chun-Liang Li, Jinwoo Shin, Honglak Lee. paper code

5. Contrastive Learning with Hard Negative Samples. ICLR2021.

Authors: Joshua Robinson, Ching-Yao Chuang, Suvrit Sra, Stefanie Jegelka. paper code

6. [LooC] What Should Not Be Contrastive in Contrastive Learning. ICLR2021.

Authors: Tete Xiao, Xiaolong Wang, Alexei A. Efros, Trevor Darrell. paper

7. [MoCo] Momentum Contrast for Unsupervised Visual Representation Learning. CVPR2020.

Authors: Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, Ross Girshick. paper code

8. [MoCo v2] Improved Baselines with Momentum Contrastive Learning.

Authors: Xinlei Chen, Haoqi Fan, Ross Girshick, Kaiming He. paper code

9. [SimCLR] A Simple Framework for Contrastive Learning of Visual Representations. ICML2020.

Authors: Ting Chen, Simon Kornblith, Mohammad Norouzi, Geoffrey Hinton. paper code

10. [SimCLR v2] Big Self-Supervised Models are Strong Semi-Supervised Learners. NIPS2020.

Authors: Ting Chen, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, Geoffrey Hinton. paper code

11. [BYOL] Bootstrap your own latent: A new approach to self-supervised Learning.

Authors: Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre H, etc.

12. [SwAV] Unsupervised Learning of Visual Features by Contrasting Cluster Assignments. NIPS2020.

Authors: Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, Armand Joulin. paper code

13. [SimSiam] Exploring Simple Siamese Representation Learning. CVPR2021.

Authors: Xinlei Chen, Kaiming He. paper code

14. Hard Negative Mixing for Contrastive Learning. NIPS2020.

Authors: Yannis Kalantidis, Mert Bulent Sariyildiz, Noe Pion, Philippe Weinzaepfel, Diane Larlus. paper

15. Supervised Contrastive Learning. NIPS2020.

Authors: Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, Dilip Krishnan. paper

16. [LoCo] LoCo: Local Contrastive Representation Learning. NIPS2020.

Authors: Yuwen Xiong, Mengye Ren, Raquel Urtasun. paper

17. What Makes for Good Views for Contrastive Learning? NIPS2020.

Authors: Yonglong Tian, Chen Sun, Ben Poole, Dilip Krishnan, Cordelia Schmid, Phillip Isola. paper

18. [ContraGAN] ContraGAN: Contrastive Learning for Conditional Image Generation. NIPS2020.

Authors: Minguk Kang, Jaesik Park. paper code

19. [SpCL] Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID. NIPS2020.

Authors: Yixiao Ge, Feng Zhu, Dapeng Chen, Rui Zhao, Hongsheng Li. paper code

II. Audio

The second category is audio, with one paper: wav2vec 2.0.

1. wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations.

Authors: Alexei Baevski, Henry Zhou, Abdelrahman Mohamed, Michael Auli. paper code

III. Videos and Multimodal

The third category is video and multimodal learning, with 12 papers. It mainly covers papers from ICLR2021 and NIPS2020, plus a few from CVPR2020.

1. Time-Contrastive Networks: Self-Supervised Learning from Video.

Authors: Pierre Sermanet, Corey Lynch, Yevgen Chebotar, Jasmine Hsu, Eric Jang, Stefan Schaal, Sergey Levine. paper

2. Contrastive Multiview Coding.

Authors: Yonglong Tian, Dilip Krishnan, Phillip Isola. paper code

3. Learning Video Representations using Contrastive Bidirectional Transformer.

Authors: Chen Sun, Fabien Baradel, Kevin Murphy, Cordelia Schmid. paper

4. End-to-End Learning of Visual Representations from Uncurated Instructional Videos. CVPR2020.

Authors: Antoine Miech, Jean-Baptiste Alayrac, Lucas Smaira, Ivan Laptev, Josef Sivic, Andrew Zisserman. paper code

5. Multi-modal Self-Supervision from Generalized Data Transformations.

Authors: Mandela Patrick, Yuki M. Asano, Polina Kuznetsova, Ruth Fong, João F. Henriques, Geoffrey Zweig, Andrea Vedaldi. paper

6. Support-set bottlenecks for video-text representation learning. ICLR2021.

Authors: Mandela Patrick, Po-Yao Huang, Yuki Asano, Florian Metze, Alexander Hauptmann, João Henriques, Andrea Vedaldi. paper

7. Contrastive Learning of Medical Visual Representations from Paired Images and Text. ICLR2021.

Authors: Yuhao Zhang, Hang Jiang, Yasuhide Miura, Christopher D. Manning, Curtis P. Langlotz. paper

8. AVLnet: Learning Audio-Visual Language Representations from Instructional Videos.

Authors: Andrew Rouditchenko, Angie Boggust, David Harwath, Brian Chen, Dhiraj Joshi, Samuel Thomas, Kartik Audhkhasi, Hilde Kuehne, Rameswar Panda, Rogerio Feris, Brian Kingsbury, Michael Picheny, Antonio Torralba, James Glass. paper

9. Self-Supervised MultiModal Versatile Networks. NIPS2020.

Authors: Jean-Baptiste Alayrac, Adrià Recasens, Rosalia Schneider, Relja Arandjelović, Jason Ramapuram, Jeffrey De Fauw, Lucas Smaira, Sander Dieleman, Andrew Zisserman. paper

10. Memory-augmented Dense Predictive Coding for Video Representation Learning.

Authors: Tengda Han, Weidi Xie, Andrew Zisserman. paper code

11. Spatiotemporal Contrastive Video Representation Learning.

Authors: Rui Qian, Tianjian Meng, Boqing Gong, Ming-Hsuan Yang, Huisheng Wang, Serge Belongie, Yin Cui. paper code

12. Self-supervised Co-training for Video Representation Learning. NIPS2020.

Authors: Tengda Han, Weidi Xie, Andrew Zisserman. paper

IV. NLP

The fourth category is natural language processing, with 14 papers. It mainly covers papers from ICLR2021 and NAACL2021.

1. [CALM] Pre-training Text-to-Text Transformers for Concept-centric Common Sense. ICLR2021.

Authors: Wangchunshu Zhou, Dong-Ho Lee, Ravi Kiran Selvam, Seyeon Lee, Xiang Ren. paper code

2. Residual Energy-Based Models for Text Generation. ICLR2021.

Authors: Yuntian Deng, Anton Bakhtin, Myle Ott, Arthur Szlam, Marc'Aurelio Ranzato. paper

3. Contrastive Learning with Adversarial Perturbations for Conditional Text Generation. ICLR2021.

Authors: Seanie Lee, Dong Bok Lee, Sung Ju Hwang. paper

4. [CoDA] CoDA: Contrast-enhanced and Diversity-promoting Data Augmentation for Natural Language Understanding. ICLR2021.

Authors: Yanru Qu, Dinghan Shen, Yelong Shen, Sandra Sajeev, Jiawei Han, Weizhu Chen. paper

5. [FairFil] FairFil: Contrastive Neural Debiasing Method for Pretrained Text Encoders. ICLR2021.

Authors: Pengyu Cheng, Weituo Hao, Siyang Yuan, Shijing Si, Lawrence Carin. paper

6. Towards Robust and Efficient Contrastive Textual Representation Learning. ICLR2021.

Authors: Liqun Chen, Yizhe Zhang, Dianqi Li, Chenyang Tao, Dong Wang, Lawrence Carin. paper

7. Self-supervised Contrastive Zero to Few-shot Learning from Small, Long-tailed Text data. ICLR2021.

Authors: Nils Rethmeier, Isabelle Augenstein. paper

8. Approximate Nearest Neighbor Negative Contrastive Learning for Dense Text Retrieval. ICLR2021.

Authors: Lee Xiong, Chenyan Xiong, Ye Li, Kwok-Fung Tang, Jialin Liu, Paul Bennett, Junaid Ahmed, Arnold Overwijk. paper

9. Self-Supervised Contrastive Learning for Efficient User Satisfaction Prediction in Conversational Agents. NAACL2021.

Authors: Mohammad Kachuee, Hao Yuan, Young-Bum Kim, Sungjin Lee. paper

10. SOrT-ing VQA Models: Contrastive Gradient Learning for Improved Consistency. NAACL2021.

Authors: Sameer Dharur, Purva Tendulkar, Dhruv Batra, Devi Parikh, Ramprasaath R. Selvaraju. paper

11. Supporting Clustering with Contrastive Learning. NAACL2021.

Authors: Dejiao Zhang, Feng Nan, Xiaokai Wei, Shangwen Li, Henghui Zhu, Kathleen McKeown, Ramesh Nallapati, Andrew Arnold, Bing Xiang. paper

12. Understanding Hard Negatives in Noise Contrastive Estimation. NAACL2021.

Authors: Wenzheng Zhang, Karl Stratos. paper

13. Contextualized and Generalized Sentence Representations by Contrastive Self-Supervised Learning: A Case Study on Discourse Relation Analysis. NAACL2021.

Authors: Hirokazu Kiyomaru, Sadao Kurohashi. paper

14. Fine-Tuning Pre-trained Language Model with Weak Supervision: A Contrastive-Regularized Self-Training Approach. NAACL2021.

Authors: Yue Yu, Simiao Zuo, Haoming Jiang, Wendi Ren, Tuo Zhao, Chao Zhang. paper

V. Language Contrastive Learning

The fifth category is language models, with 5 papers in this direction.

1. Distributed Representations of Words and Phrases and their Compositionality. NIPS2013.

Authors: Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, Jeffrey Dean. paper

2. An efficient framework for learning sentence representations.

Authors: Lajanugen Logeswaran, Honglak Lee. paper

3. XLNet: Generalized Autoregressive Pretraining for Language Understanding.

Authors: Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Carbonell, Ruslan Salakhutdinov, Quoc V. Le. paper

4. A Mutual Information Maximization Perspective of Language Representation Learning.

Authors: Lingpeng Kong, Cyprien de Masson d'Autume, Wang Ling, Lei Yu, Zihang Dai, Dani Yogatama. paper

5. InfoXLM: An Information-Theoretic Framework for Cross-Lingual Language Model Pre-Training.

Authors: Zewen Chi, Li Dong, Furu Wei, Nan Yang, Saksham Singhal, Wenhui Wang, Xia Song, Xian-Ling Mao, Heyan Huang, Ming Zhou. paper

VI. Graph

The sixth category combines graphs with contrastive learning, with 4 papers.

1. [GraphCL] Graph Contrastive Learning with Augmentations. NIPS2020.

Authors: Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, Yang Shen. paper

2. Contrastive Multi-View Representation Learning on Graphs. ICML2020.

Authors: Kaveh Hassani, Amir Hosein Khasahmadi. paper

3. [GCC] GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training. KDD2020.

Authors: Jiezhong Qiu, Qibin Chen, Yuxiao Dong, Jing Zhang, Hongxia Yang, Ming Ding, Kuansan Wang, Jie Tang. paper

4. [InfoGraph] InfoGraph: Unsupervised and Semi-supervised Graph-Level Representation Learning via Mutual Information Maximization. ICLR2020.

Authors: Fan-Yun Sun, Jordan Hoffmann, Vikas Verma, Jian Tang. paper

VII. Adversarial Learning

The seventh category combines adversarial training with contrastive learning. So far it contains only one paper.

1. Contrastive Learning with Adversarial Examples. NIPS2020.

Authors: Chih-Hui Ho, Nuno Vasconcelos. paper

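VIII. Recommendation

The eighth category combines recommender systems with contrastive learning, with 3 papers. These papers use it to tackle the sparsity of click data or to improve model robustness.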

1. Self-Supervised Hypergraph Convolutional Networks for Session-based Recommendation. AAAI2021.

Authors: Xin Xia, Hongzhi Yin, Junliang Yu, Qinyong Wang, Lizhen Cui, Xiangliang Zhang. paper code

2. Self-Supervised Multi-Channel Hypergraph Convolutional Network for Social Recommendation. WWW2021.

Authors: Junliang Yu, Hongzhi Yin, Jundong Li, Qinyong Wang, Nguyen Quoc Viet Hung, Xiangliang Zhang. paper code

3. Self-supervised Graph Learning for Recommendation. SIGIR2021.

Authors: Jiancan Wu, Xiang Wang, Fuli Feng, Xiangnan He, Liang Chen, Jianxun Lian, Xing Xie. paper code

IX. Applications

The ninth category covers applications of contrastive learning to image-to-image translation, with 1 paper.

1. Contrastive Learning for Unpaired Image-to-Image Translation.

Authors: Taesung Park, Alexei A. Efros, Richard Zhang, Jun-Yan Zhu. paper