How can you instruction-tune an LLM without a labeled dataset? Introducing a promising model for generating labeled datasets

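When building LLM applications, there are generally two ways to improve results. One is to build an external knowledge base and use RAG (retrieval-augmented generation). But RAG is not a cure-all: for domain-specific LLM applications, or for cases where the model must perform a particular task without being shown examples, fine-tuning is needed. Compared with RAG, fine-tuning needs more compute and a longer turnaround, but the bigger bottleneck is that it requires labeled training data. Many companies have not accumulated such high-quality data. What they have is usually unlabeled, such as individual articles or internal rules and regulations, rather than question-answer pairs.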

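To make fine-tuning possible, the traditional approach is to write question-answer pairs by hand. Building on this, a Stanford team introduced Alpaca, which has a strong model imitate a small set of human-written seed examples to generate a labeled dataset (Alpaca itself used OpenAI's text-davinci-003; GPT-4 is a common choice for this role today).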
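The seed-imitation idea can be sketched as follows: sample a few human-written seed pairs, show them to a strong model as demonstrations, and leave an open slot for it to fill with a new pair. `build_seed_prompt` and its prompt wording are hypothetical and are not Alpaca's actual template; the call to the strong model itself is left out.

```python
import random
from typing import Dict, List


def build_seed_prompt(seeds: List[Dict[str, str]], k: int = 3, seed: int = 0) -> str:
    """Assemble a few-shot prompt from k randomly sampled seed examples.

    Hypothetical helper for illustration only; Alpaca's real template differs.
    """
    rng = random.Random(seed)  # fixed seed so the sampled demonstrations are reproducible
    demos = rng.sample(seeds, k=min(k, len(seeds)))
    parts = ["Write a new task in the same format as the examples below.\n"]
    for i, ex in enumerate(demos, start=1):
        parts.append(f"Example {i}\nInstruction: {ex['instruction']}\nOutput: {ex['output']}\n")
    # Leave the final slot open so the strong model continues with a new pair
    parts.append(f"Example {len(demos) + 1}\nInstruction:")
    return "\n".join(parts)
```

Calling it repeatedly with different `seed` values samples different demonstrations, which is one simple way to push the strong model toward more varied outputs.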

https://arxiv.org/pdf/2402.18334

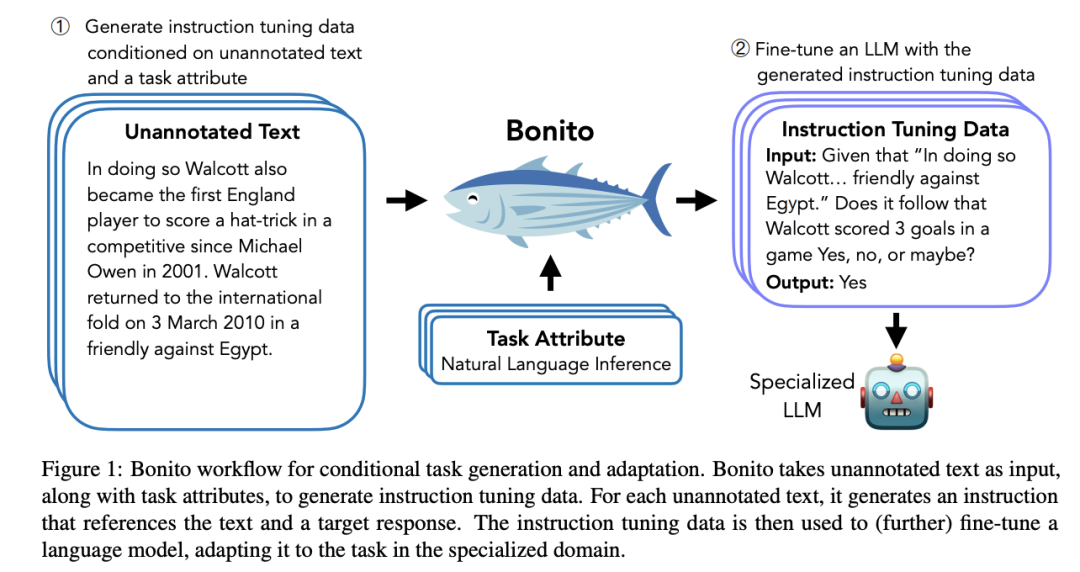

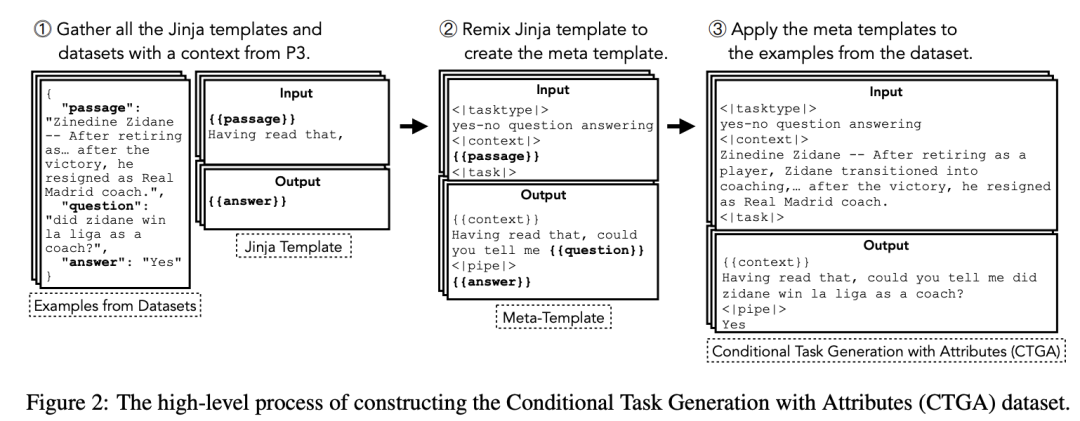

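Here I introduce a new project for generating training data, Bonito (https://github.com/BatsResearch/bonito), an open-source model for conditional task generation: it converts unannotated text into task-specific training datasets for instruction tuning. According to the paper, the model is mistralai/Mistral-7B-v0.1 fine-tuned on a dataset of 1.65 million examples (https://huggingface.co/datasets/BatsResearch/ctga-v1), and it supports many task types, including multiple-choice question answering, yes-no question answering, natural language inference, and topic classification.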
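"Conditional" here means the model is conditioned on two inputs, a task type and a passage of unannotated text, and produces an instruction together with its answer. The sketch below mimics that input/output contract in plain Python. The special tokens (`<|tasktype|>`, `<|context|>`, `<|task|>`, `<|pipe|>`) and the `{{context}}` placeholder reflect my reading of the Bonito paper and repository and should be treated as assumptions; `build_bonito_prompt` and `parse_bonito_output` are hypothetical names, not the library's API (the library's own internal helpers are `_prepare_bonito_input` and `_postprocess_dataset`).

```python
from typing import Optional, Tuple

# Assumed special tokens; verify against the Bonito source before relying on them.
TASKTYPE, CONTEXT, TASK, PIPE = "<|tasktype|>", "<|context|>", "<|task|>", "<|pipe|>"


def build_bonito_prompt(task_type: str, context: str) -> str:
    """Format a (task type, context) pair as a conditional-generation prompt."""
    return f"{TASKTYPE}\n{task_type.strip()}\n{CONTEXT}\n{context.strip()}\n{TASK}\n"


def parse_bonito_output(prediction: str, context: str) -> Optional[Tuple[str, str]]:
    """Split a generation into (input, output); return None if it cannot be parsed."""
    pieces = prediction.split(PIPE)
    if len(pieces) != 2:
        return None  # unparseable generations are dropped, like generate_tasks does
    instruction, answer = (p.strip() for p in pieces)
    # The generated instruction refers to the passage via a placeholder; substitute it
    return instruction.replace("{{context}}", context), answer
```

Keeping the passage out of the generated text and re-inserting it afterwards means the model only has to write the question and answer, which keeps generations short.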

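Bonito itself is a data-generation LLM application whose inference is accelerated by vLLM, and it is straightforward to use. The basic workflow is to extract the text of your documents (for example, PDFs), load it into a Hugging Face `datasets` Dataset, specify the type of task to generate, and pass both to `bonito.generate_tasks`.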
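The first step, turning a long document into one passage per row, can be sketched with the standard library alone. This assumes the text has already been extracted from the PDF with a parser of your choice; `chunk_paragraphs` and the 1,000-character budget are illustrative choices, not part of Bonito.

```python
from typing import Dict, List


def chunk_paragraphs(text: str, max_chars: int = 1000) -> List[Dict[str, str]]:
    """Group blank-line-separated paragraphs into chunks of at most max_chars.

    A single paragraph longer than max_chars is kept whole rather than split.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    records: List[Dict[str, str]] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate  # still fits: keep growing the current chunk
        else:
            if current:
                records.append({"input": current})
            current = para  # start a new chunk with the paragraph that overflowed
    if current:
        records.append({"input": current})
    return records
```

The resulting list of `{"input": ...}` records can be turned into a dataset with `datasets.Dataset.from_list(chunk_paragraphs(text))` and passed to `generate_tasks` with `context_col="input"`. Splitting on paragraph boundaries keeps each passage self-contained, which matters because every generated question is grounded in a single passage.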

The `Bonito` class definition:

```python
from datasets import Dataset
from vllm import LLM, SamplingParams

from bonito import AbstractBonito


class Bonito(LLM, AbstractBonito):
    def generate_tasks(
        self,
        text_dataset: Dataset,
        context_col: str,
        task_type: str,
        sampling_params: SamplingParams,
        **kwargs,
    ):
        """
        Generates tasks using the Bonito model.

        This method takes a text dataset, a context column name,
        a task type, and sampling parameters, and generates tasks
        using the Bonito model. It processes the input dataset,
        generates outputs, collects multiple generations into
        one dataset object, and filters out the examples that
        cannot be parsed.

        Args:
            text_dataset (Dataset): The dataset that provides the text
                for the tasks.
            context_col (str): The name of the column in the dataset
                that provides the context for the tasks.
            task_type (str): The type of the tasks. This can be a
                short form or a full form.
            sampling_params (SamplingParams): The parameters for
                sampling.
            **kwargs: Additional keyword arguments.

        Returns:
            Dataset: The synthetic dataset with the generated tasks.
        """
        # build one Bonito prompt (task type + context) per input row
        processed_dataset = self._prepare_bonito_input(
            text_dataset, task_type, context_col, **kwargs
        )
        outputs = self.generate(processed_dataset["input"], sampling_params)

        # collect multiple generations into one dataset object;
        # each vLLM RequestOutput holds sampling_params.n completions
        examples = []
        for i, example in enumerate(text_dataset.to_list()):
            for output in outputs[i].outputs:
                examples.append(
                    {"context": example[context_col], "prediction": output.text.strip()}
                )

        synthetic_dataset = Dataset.from_list(examples)

        # filter out the examples that cannot be parsed
        synthetic_dataset = self._postprocess_dataset(
            synthetic_dataset, context_col="context", **kwargs
        )

        return synthetic_dataset
```

Basic usage:

```python
from bonito import Bonito
from vllm import SamplingParams
from datasets import load_dataset

# Initialize the Bonito model
bonito = Bonito("BatsResearch/bonito-v1")

# load dataset with unannotated text
unannotated_text = load_dataset(
    "BatsResearch/bonito-experiment",
    "unannotated_contract_nli"
)["train"].select(range(10))

# Generate synthetic instruction tuning dataset
sampling_params = SamplingParams(max_tokens=256, top_p=0.95, temperature=0.5, n=1)
synthetic_dataset = bonito.generate_tasks(
    unannotated_text,
    context_col="input",
    task_type="nli",
    sampling_params=sampling_params
)
```

If you want to run the model on a GPU with less memory, such as a T4, you can quantize it.
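A back-of-the-envelope calculation shows why quantization matters on such a card. The sketch below estimates the memory taken by the weights alone, assuming Mistral's announced figure of about 7.3 billion parameters; it ignores the KV cache, activations, and the small overhead of quantization scales, so real usage is higher. A T4 has 16 GB of memory.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Memory needed to hold the model weights alone, in GiB."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 2**30


if __name__ == "__main__":
    params = 7.3e9  # approximate parameter count of Mistral-7B
    for label, bits in [("fp16", 16), ("4-bit (AWQ)", 4)]:
        print(f"{label:>12}: {weight_memory_gib(params, bits):5.1f} GiB")
```

At 16 bits per weight the parameters alone fill most of the T4's memory, leaving little room for the KV cache; at 4 bits they need roughly a quarter of that.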

```python
from typing import Dict, List

from awq import AutoAWQForCausalLM
from datasets import Dataset
from transformers import AutoTokenizer

from bonito import AbstractBonito


class QuantizedBonito(AbstractBonito):
    def __init__(self, model_name_or_path):
        # load AWQ-quantized weights with fused layers and move them to the GPU
        self.model = AutoAWQForCausalLM.from_quantized(model_name_or_path, fuse_layers=True).cuda()
        self.tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)

    def generate_task(
        self,
        unannotated_paragraph: str,
        task_type: str,
        sampling_params: dict,
    ) -> Dict:
        """
        Generates a synthetic instruction tuning pair using the Quantized Bonito model.

        This method takes an unannotated text, a task type, and sampling parameters,
        and generates a synthetic input-output pair.

        Args:
            unannotated_paragraph (str): The unannotated text or a paragraph.
            task_type (str): The type of the tasks. This can be a
                short form or a full form.
            sampling_params (dict): The parameters for sampling.

        Returns:
            Dict: The synthetic input-output pair for the task type.
        """
        text_dataset = Dataset.from_list([{"input": unannotated_paragraph}])
        processed_dataset = self._prepare_bonito_input(
            text_dataset, task_type, context_col="input"
        )
        outputs = self._generate_text(processed_dataset["input"], sampling_params)

        examples = []
        for i, example in enumerate(text_dataset.to_list()):
            output = outputs[i]
            example["prediction"] = output.strip()
            examples.append(example)

        synthetic_dataset = Dataset.from_list(examples)

        # filter out the examples that cannot be parsed;
        # note: [0] raises IndexError if the single generation was filtered out
        synthetic_dataset_dict = self._postprocess_dataset(
            synthetic_dataset, context_col="input"
        ).to_list()[0]

        return synthetic_dataset_dict

    def _generate_text(
        self,
        prompts: List[str],
        sampling_params: dict,
    ) -> List[str]:
        """
        Generate text using the Hugging Face transformers generate function.

        This method takes a list of prompts, encodes them,
        generates text using the model, decodes the generated
        text, and appends it to a list.

        Args:
            prompts (List[str]): Prompts for text generation.
            sampling_params (dict): Parameters for sampling during generation.

        Returns:
            List[str]: A list of generated texts corresponding to the prompts.
        """
        generated_texts = []
        for prompt in prompts:
            input_ids = self.tokenizer.encode(prompt, return_tensors="pt")
            input_ids = input_ids.cuda()
            output = self.model.generate(
                input_ids,
                do_sample=True,
                **sampling_params
            )
            # drop the prompt tokens and decode only the newly generated continuation
            generated_text = self.tokenizer.decode(output[0][len(input_ids[0]):], skip_special_tokens=True)
            generated_texts.append(generated_text)

        return generated_texts
```

Taking `task_type="ynqa"` (yes-no question answering) as an example, the generated result looks like this:

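```python
from pprint import pprint

# hypothetical path: point this at an AWQ-quantized Bonito checkpoint
bonito = QuantizedBonito("path/to/bonito-v1-awq")

# the passage that appears in the generated input below
unannotated_paragraph = (
    "1. “Confidential Information”, whenever used in this Agreement, shall mean any data, "
    "document, specification and other information or material, that is delivered or "
    "disclosed by UNHCR to the Recipient in any form whatsoever, whether orally, visually "
    "in writing or otherwise (including computerized form), and that, at the time of "
    "disclosure to the Recipient, is designated as confidential."
)

sampling_params = {'max_new_tokens': 256, 'top_p': 0.95, 'temperature': 0.7, 'num_return_sequences': 1}
synthetic_dataset = bonito.generate_task(
    unannotated_paragraph,
    task_type="ynqa",
    sampling_params=sampling_params
)
pprint("----Generated Instructions----")
pprint(f'Input: {synthetic_dataset["input"]}')
pprint(f'Output: {synthetic_dataset["output"]}')
```

```
'----Generated Instructions----'
('Input: Based on the following passage, is a written communication '
 'confidential? 1. “Confidential Information”, whenever used in this '
 'Agreement, shall mean any data, document, specification and other '
 'information or material, that is delivered or disclosed by UNHCR to the '
 'Recipient in any form whatsoever, whether orally, visually in writing or '
 'otherwise (including computerized form), and that, at the time of disclosure '
 'to the Recipient, is designated as confidential.')
'Output: Yes'
```

The supported values of `task_type` are: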

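- Extractive question answering (exqa): answer questions about a given text passage by extracting the answer directly from the text.
- Multiple-choice question answering (mcqa): answer questions given a set of multiple-choice options.
- Question generation (qg): create questions from the provided text.
- Question answering without choices (qa): answer questions without being given multiple-choice options.
- Yes-no question answering (ynqa): generate yes-or-no answers to questions.
- Coreference resolution (coref): identify expressions in the text that refer to the same entity.
- Paraphrase generation (paraphrase): rewrite a sentence or phrase with different wording while preserving its meaning.
- Paraphrase identification (paraphrase_id): determine whether two sentences or phrases convey the same meaning.
- Sentence completion (sent_comp): fill in the missing part of a sentence.
- Sentiment analysis (sentiment): identify the sentiment expressed in a text, such as positive, negative, or neutral.
- Summarization (summarization): condense a longer text into a shorter summary that captures the main points.
- Text generation (text_gen): create coherent, contextually relevant text from a prompt.
- Topic classification (topic_class): classify text into predefined topics.
- Word sense disambiguation (wsd): determine the meaning of a word from its context.
- Textual entailment (te): predict whether one text logically follows from another.
- Natural language inference (nli): determine the relationship between two texts, such as contradiction, entailment, or neutral.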
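Because `task_type` accepts either a short or a full form, it can be worth validating the value before starting a long generation run. The mapping below simply transcribes the list above; the descriptive names are this article's wording and may not match the exact full-form strings the library expects, so the hypothetical helper `resolve_task_type` always normalizes to the short form.

```python
from typing import Dict

# Short forms from the list above, mapped to descriptive names (wording is illustrative).
TASK_TYPES: Dict[str, str] = {
    "exqa": "extractive question answering",
    "mcqa": "multiple-choice question answering",
    "qg": "question generation",
    "qa": "question answering without choices",
    "ynqa": "yes-no question answering",
    "coref": "coreference resolution",
    "paraphrase": "paraphrase generation",
    "paraphrase_id": "paraphrase identification",
    "sent_comp": "sentence completion",
    "sentiment": "sentiment analysis",
    "summarization": "summarization",
    "text_gen": "text generation",
    "topic_class": "topic classification",
    "wsd": "word sense disambiguation",
    "te": "textual entailment",
    "nli": "natural language inference",
}


def resolve_task_type(name: str) -> str:
    """Return the short form for a short or descriptive task name; raise if unknown."""
    key = name.strip().lower()
    if key in TASK_TYPES:
        return key
    for short, full in TASK_TYPES.items():
        if key == full:
            return short
    raise ValueError(f"unsupported task type: {name!r}; expected one of {sorted(TASK_TYPES)}")
```

For example, `resolve_task_type("Natural Language Inference")` returns `"nli"`, which can then be passed as `task_type` to `generate_tasks`; failing fast on a typo is cheaper than discovering it after the model has loaded.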

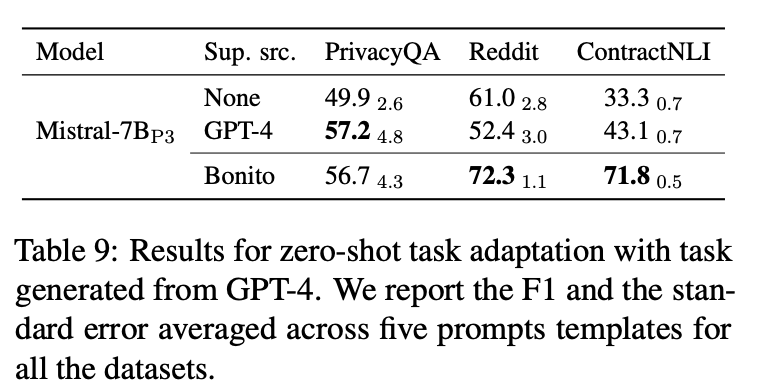

In terms of performance, Bonito outperformed the GPT-4-based approach on two of the three datasets evaluated.

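Summary:

Compared with generating labeled samples with GPT-4, Bonito, a model fine-tuned specifically for dataset generation, supports zero-shot sample generation (no seed examples are needed) and is built on an open-source model, which gives it strong advantages in openness, cost, and performance.

As fine-tuning becomes more widespread, I expect the quality and production cost of training data to receive growing attention, and dataset generation models such as Bonito to see further development.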

This article is reposted from AI工程化 (AI Engineering); author: ully