UCL's Jun Wang Calls for Innovation: Post-ChatGPT Theories of Artificial General Intelligence and Their Applications

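*This article was originally written in English. The Chinese translation was produced by ChatGPT and is presented as-is, with corrections marked at a few ambiguous points (the parts highlighted in red and yellow). The English original is given in the appendix. Where I found ChatGPT's translation inadequate, the cause was often my own limitations: the English original was not expressed fluently enough. Interested readers are encouraged to compare the two versions.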

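ChatGPT has recently attracted the attention of the research community, the business world, and the general public. It is a general-purpose chatbot that can answer users' open-ended prompts or questions. People have become curious about its remarkable, human-like language skills, which let it give coherent, consistent, and well-structured answers. Backed by a large pre-trained generative language model, its multi-turn dialogue supports a wide range of text- and code-based tasks, including novel writing, text games, and even robot manipulation through code generation. This has led the public to believe that general-purpose machine learning and machine understanding will soon be achieved.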

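Digging deeper, one may find that when programming code is added to the training data, certain reasoning abilities, common-sense understanding, and even chain of thought (a series of intermediate reasoning steps) may emerge once a model reaches a certain scale. This finding is exciting and opens up new possibilities for AI research and applications, but it raises more questions than it answers. For example, can these emergent abilities serve as early indicators of higher intelligence, or are they merely naive imitation of human behaviour? Will continuing to scale already enormous models lead to the birth of artificial general intelligence (AGI), or are these models only superficially intelligent, with constrained capabilities? Answering these questions could bring about fundamental shifts in AI theory and applications.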

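We therefore urge not only replicating ChatGPT's success but, more importantly, driving ground-breaking research and new application development in the following areas of AI (this is by no means an exhaustive list):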

1.New machine learning theory that goes beyond the established paradigm of task-specific machine learning

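Inductive reasoning is a type of reasoning in which we draw conclusions about the world from past observations. Machine learning can loosely be regarded as inductive reasoning, because it uses past (training) data to improve performance on new tasks. Taking machine translation as an example, a typical machine learning pipeline consists of the following four main steps: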

1.Define the specific problem, e.g., translating English sentences into Chinese: E → C,

2.Collect the data, e.g., sentence pairs {E → C},

3.Train a model, e.g., a deep neural network with inputs {E} and outputs {C},

4.Apply the model to unseen data points, e.g., input a new English sentence E', output its Chinese translation C', and evaluate the result.
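The following sketch makes the isolation between tasks concrete. It runs the four steps with a deliberately tiny "model": a word-by-word lookup table learned from position-aligned sentence pairs. Real translation systems do not work this way; the functions `train` and `predict` and the toy data are all hypothetical.

```python
from collections import Counter, defaultdict

def train(pairs):
    """Step 3: learn a word-to-word lookup table from aligned sentence pairs.

    Assumes each pair is (source_tokens, target_tokens) aligned position by
    position, a deliberate oversimplification of real translation data.
    """
    counts = defaultdict(Counter)
    for src, tgt in pairs:
        for s, t in zip(src, tgt):
            counts[s][t] += 1
    # Keep the most frequent target word seen for each source word.
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}

def predict(model, sentence):
    """Step 4: apply the model to an unseen sentence; unknown words map to '<unk>'."""
    return [model.get(word, "<unk>") for word in sentence]

# Steps 1-2: the task is E -> C, and the data are English/Chinese pairs.
en_zh_pairs = [(["I", "love", "cats"], ["我", "愛", "貓"]),
               (["I", "love", "dogs"], ["我", "愛", "狗"])]
model_en_zh = train(en_zh_pairs)

# A new task (French -> Chinese) starts again from step 1 with its own data;
# nothing learned by model_en_zh carries over.
fr_zh_pairs = [(["chat"], ["貓"]), (["chien"], ["狗"])]
model_fr_zh = train(fr_zh_pairs)

print(predict(model_en_zh, ["dogs", "love", "cats"]))  # ['狗', '愛', '貓']
print(predict(model_en_zh, ["chien"]))                 # ['<unk>']
print(predict(model_fr_zh, ["chien"]))                 # ['狗']
```

`model_en_zh` has never seen French, so it returns `<unk>` for `chien`. The French-to-Chinese task therefore has to collect its own pairs and train `model_fr_zh` from scratch.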

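As shown above, traditional machine learning isolates the training of each specific task. For every new task, the process must therefore be reset and rerun from step 1 to step 4, and all knowledge (data, models, etc.) acquired from previous tasks is lost. For example, translating French into Chinese requires a different model.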

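Under this paradigm, the work of machine learning theorists has focused mainly on understanding how well a learning model generalises from the training data to unseen test data. For example, a common question is how many training samples are needed to achieve a given error bound when predicting unseen test data. We know that an inductive bias (i.e., prior knowledge or prior assumptions) is necessary for a learning model to predict outputs it has not encountered. This is because the output values in unseen situations are completely arbitrary, so the problem cannot be solved without making certain assumptions. The celebrated no-free-lunch theorem further states that every inductive bias has limitations: it suits only certain groups of problems and may fail elsewhere if the assumed prior knowledge is incorrect.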
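The article does not name a specific bound. One standard textbook instance of the sample-size question is the PAC bound for a finite hypothesis class in the realisable setting, shown below with a worked example using illustrative numbers. Choosing the hypothesis class H is exactly where the inductive bias enters: the guarantee says nothing about targets that lie outside H.

```latex
% Realisable PAC bound for a finite hypothesis class \mathcal{H}:
% with probability at least 1-\delta, every h \in \mathcal{H} consistent with
% m i.i.d. training samples has true error at most \varepsilon, provided
m \;\ge\; \frac{1}{\varepsilon}\left(\ln\lvert\mathcal{H}\rvert + \ln\frac{1}{\delta}\right)

% Worked example (illustrative numbers): |\mathcal{H}| = 2^{20}, \varepsilon = 0.05, \delta = 0.01
m \;\ge\; \frac{1}{0.05}\left(20\ln 2 + \ln 100\right) \approx 20\,(13.86 + 4.61) \approx 369.4
\;\Rightarrow\; m = 370 \text{ samples suffice}
```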

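Figure 1 Screenshot of ChatGPT used for machine translation. The user's prompt contains only an instruction; no demonstration examples are needed.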

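Although the theory above still holds, the arrival of foundation language models may have changed our approach to machine learning. The new machine learning pipeline could be as follows (taking the same machine translation problem as an example; see Figure 1):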

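1.Access, via an API, a foundation language model trained by others, e.g., a model trained on diverse documents that include paired English/Chinese corpora,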

2.With a few examples or none at all, design a suitable text description (called a prompt) for the task at hand, e.g., Prompt = {a few examples E → C},

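3.Conditioned on the prompt and a given new test data point, the language model generates the answer, e.g., append E' to the prompt and generate C' from the model,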

4.Interpret the answer as the predicted result.
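The new pipeline can be sketched in a few lines, assuming only that some text-completion endpoint is available. Here `complete(prompt) -> str` is a hypothetical stand-in for API access to a foundation model; no specific vendor API is implied. The `English:`/`Chinese:` prompt layout is likewise an illustrative choice. Passing no examples produces the instruction-only (zero-shot) prompt of Figure 1.

```python
from typing import Callable, Sequence, Tuple

INSTRUCTION = "Translate the following English sentence into Chinese."

def build_prompt(examples: Sequence[Tuple[str, str]], query: str) -> str:
    """Steps 2-3: instruction, optional E -> C demonstrations, then the new input E'."""
    blocks = [INSTRUCTION]
    for english, chinese in examples:  # may be empty: zero-shot, as in Figure 1
        blocks.append(f"English: {english}\nChinese: {chinese}")
    blocks.append(f"English: {query}\nChinese:")  # answer slot left open for the model
    return "\n\n".join(blocks)

def translate_in_context(complete: Callable[[str], str], query: str,
                         examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Steps 1-4 with a hypothetical `complete(prompt) -> text` model call."""
    generated = complete(build_prompt(examples, query)).strip()
    # Step 4: interpret the first generated line as the predicted translation C'.
    return generated.splitlines()[0] if generated else ""

def fake_model(prompt: str) -> str:
    """Stand-in for a real foundation-model API; it ignores the prompt."""
    return " 我愛貓\nEnglish: (the model keeps going)"

print(translate_in_context(fake_model, "I love cats"))  # 我愛貓
```

Nothing is trained here. Switching to French-to-Chinese would only require a different instruction and different examples.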

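As step 1 shows, the foundation language model acts as a one-size-fits-all knowledge base. The prompt and context provided in step 2 let the foundation language model be customised to a specific goal or problem from only a few demonstration instances. Although this pipeline is still mainly limited to text-based problems, it is reasonable to assume that, as cross-modal (see Section 3) foundation pre-trained models develop, it will become the standard for machine learning in general. This could break down the task barriers that have so far been necessary and pave the way for artificial general intelligence (AGI).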

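However, our understanding of how the demonstration examples in a prompt actually work is still at an early stage. From some early work, we now understand that the format of the demonstration samples matters more than the correctness of their labels. For example, as shown in Figure 1, we do not need to provide translation examples, only a language instruction. But are there theoretical limits to this adaptability, as the no-free-lunch theorem suggests? Can the contextual and instruction-based knowledge stated in a prompt be integrated into the model for future use? We have only begun to explore these questions. We therefore call for a new understanding of, and new principles for, this new form of in-context learning and its theoretical limits and properties, for example, where the bounds of generalisation lie.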

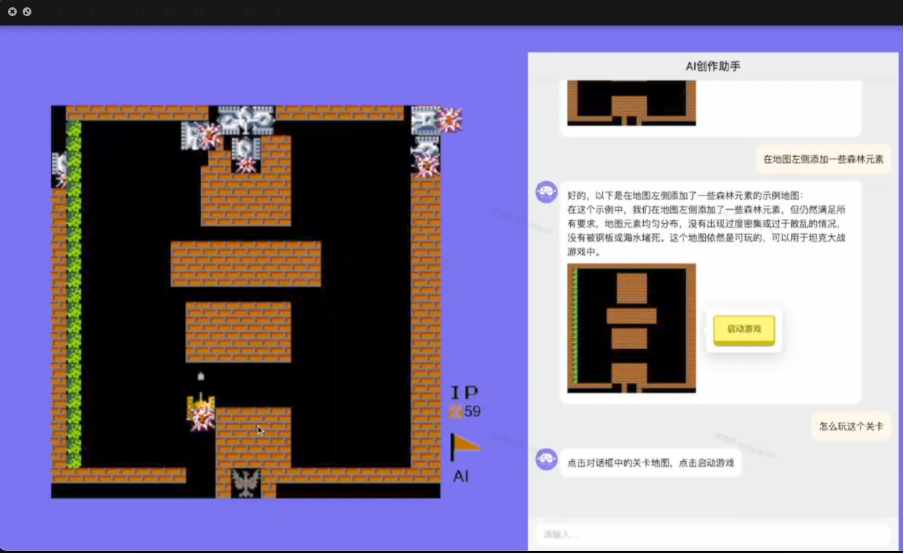

Figure 2 Illustration of AI Generating Action (AIGA) used to design computer games.

2.Honing reasoning skills

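We are on the edge of an exciting era in which all of our linguistic and behavioural data can be mined for training (and absorbed by enormous computerised models). This is a tremendous achievement, because our entire collective experience and civilisation could be digested into a single (hidden) knowledge base, in the form of an artificial neural network, for later use. In fact, ChatGPT and large foundation models are said to display some form of reasoning ability and may even, to some extent, understand the mental states of others (theory of mind). This is achieved through data fitting (using masked language token prediction as the training signal) and imitation (of human behaviour). Whether this purely data-driven strategy will bring greater intelligence, however, remains open to debate.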

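To illustrate this point, consider teaching an agent to play chess. Even if the agent has access to an unlimited amount of human chess data, it would be very difficult for it to produce policies better than those already in the data merely by imitating existing ones. Using the data, however, the agent can build an understanding of the world (e.g., the rules of the game) and use it to "think" (build a simulator in its brain and gather feedback from it to create better policies). This highlights the importance of inductive bias: rather than relying on brute force alone, a learning agent needs some model of the world in order to improve itself.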
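The chess argument can be reproduced on a much smaller game. In the sketch below, a take-away game stands in for chess, and the human records are invented. Pure imitation copies the most common human move, while a planner "thinks" by simulating the rules. The rules are simply handed to the planner here; in the setting described above, they would have to be inferred from the data.

```python
from collections import Counter, defaultdict
from functools import lru_cache

MOVES = (1, 2, 3)  # toy rules: remove 1-3 stones per turn; whoever takes the last stone wins

def imitation_policy(human_data):
    """Pure imitation: copy the most frequent human move for each pile size."""
    counts = defaultdict(Counter)
    for pile, move in human_data:
        counts[pile][move] += 1
    return {pile: c.most_common(1)[0][0] for pile, c in counts.items()}

@lru_cache(maxsize=None)
def can_force_win(pile: int) -> bool:
    """'Thinking' with a world model: simulate the rules to see whether the player
    to move can force a win (a pile of 0 means the previous player already won)."""
    return any(m <= pile and not can_force_win(pile - m) for m in MOVES)

def planned_move(pile: int) -> int:
    """Choose a move that leaves the opponent in a losing position, if one exists."""
    for m in MOVES:
        if m <= pile and not can_force_win(pile - m):
            return m
    return 1  # every move loses against perfect play; take one stone

# Hypothetical records from mediocre players who mostly take a single stone.
human_data = [(5, 1), (6, 1), (6, 1), (6, 2), (7, 1), (7, 1), (7, 3)]
imitator = imitation_policy(human_data)

for pile in (5, 6, 7):
    print(pile, imitator[pile], planned_move(pile))
# 5 1 1
# 6 1 2
# 7 1 3
```

For piles of 6 and 7, the imitator repeats the majority move, which hands the opponent a winning position. The model-based planner instead finds the winning replies (2 and 3), which only a minority of the recorded moves used.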

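There is therefore an urgent need to study and understand the emerging capabilities of foundation models in depth. Beyond language skills, we advocate acquiring genuine reasoning ability by studying the underlying mechanisms. One promising approach is to draw inspiration from neuroscience and brain science to decode the mechanisms of human reasoning and advance the development of language models. At the same time, building a solid theory of mind may also require a deep understanding of multi-agent learning and its underlying principles.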

3.From AI Generating Content (AIGC) to AI Generating Action (AIGA)

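The implicit semantics developed through human language are central to foundation language models, and how to exploit them is a key topic for general-purpose machine learning. For example, once the semantic space is aligned with other media (such as photos, videos, and sounds) or with other forms of human and machine behaviour data (such as robot trajectories/actions), we gain the ability to interpret them semantically at no extra cost. In this way, machine learning (prediction, generation, and decision-making) becomes general and decomposable. However, cross-modal alignment is a major challenge, because labelling the relationships is highly labour-intensive. Moreover, aligning with human values becomes difficult when many stakeholders have conflicting interests.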
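The sketch below illustrates the "no extra cost" point. It assumes the hard part is already done: embeddings have been learned so that text and other modalities share one semantic space (for example, through contrastive training). The three-dimensional vectors are made up for illustration; real embeddings have hundreds of dimensions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors in the shared semantic space."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical text embeddings, assumed already aligned with other modalities.
TEXT_EMBEDDINGS = {"a photo of a cat": [0.9, 0.1, 0.0],
                   "a photo of a dog": [0.1, 0.9, 0.0],
                   "robot arm grasps a cup": [0.0, 0.1, 0.9]}

def interpret(item_embedding, labels=TEXT_EMBEDDINGS):
    """Describe a non-text item (image, trajectory) by its nearest text in the shared space."""
    return max(labels, key=lambda text: cosine(item_embedding, labels[text]))

print(interpret([0.8, 0.2, 0.1]))   # a photo of a cat
print(interpret([0.05, 0.0, 1.0]))  # robot arm grasps a cup
```

A new image or robot trajectory is described simply by its nearest caption, with no labels specific to that modality.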

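A fundamental limitation of ChatGPT is that it can communicate directly only with humans. Once sufficient alignment with the external world has been established, however, foundation language models should be able to learn how to interact with a wide variety of parties and environments. This matters because it would extend their reasoning ability and language-based semantics to broader applications and capabilities beyond mere conversation. For example, such a model could develop into a generalist agent able to browse the Internet, control computers, and manipulate robots. It therefore becomes all the more important to put in place procedures that ensure the agent's responses (often in the form of generated actions) are safe, reliable, unbiased, and trustworthy.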
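One simple kind of procedure for generated actions is to validate each one before it is executed. The sketch below checks a model-proposed action against an allow-list and an expected set of arguments. The action names, argument names, and the https-only rule are hypothetical, and a check like this is only one layer of such a procedure.

```python
from urllib.parse import urlparse

# Hypothetical allow-list: action name -> exact set of expected argument names.
ALLOWED_ACTIONS = {"open_url": {"url"},
                   "click": {"element_id"},
                   "type_text": {"element_id", "text"}}

def check_action(action: dict) -> tuple:
    """Return (ok, reason) for a model-generated action before it is executed."""
    name, args = action.get("name"), action.get("args", {})
    if name not in ALLOWED_ACTIONS:
        return False, f"action {name!r} is not allow-listed"
    if set(args) != ALLOWED_ACTIONS[name]:
        return False, f"unexpected arguments for {name}: {sorted(args)}"
    if name == "open_url" and urlparse(str(args["url"])).scheme != "https":
        return False, "only https URLs may be opened"
    return True, "ok"

print(check_action({"name": "open_url", "args": {"url": "https://example.com"}}))  # (True, 'ok')
print(check_action({"name": "run_shell", "args": {"cmd": "ls"}}))
# (False, "action 'run_shell' is not allow-listed")
```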

Figure 2 shows an example of AIGA interacting with a game engine to automate the process of designing a video game.

4.Multi-agent theories of interaction with foundation language models

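ChatGPT uses in-context learning and prompt engineering to drive a multi-turn dialogue with a person within a single session. Given a question or prompt, the entire preceding conversation (questions and answers) is sent to the system as additional context for constructing the response. This is a simple dialogue-driven Markov decision process (MDP) model: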

{State = context, Action = response, Reward = thumbs-up/thumbs-down rating}.
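The tuple above can be written down directly. The point of the sketch is that the state is simply the serialised conversation so far. `toy_policy` stands in for the language model and `toy_rating` for the user's thumbs rating; both are hypothetical. Mapping thumbs up/down to +1/-1 is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class DialogueState:
    """State = context: all previous (question, response) turns in the session."""
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def context_for(self, question: str) -> str:
        """Serialise the whole history plus the new question, as sent to the model."""
        history = "".join(f"User: {q}\nAssistant: {r}\n" for q, r in self.turns)
        return f"{history}User: {question}\nAssistant:"

def step(state: DialogueState, question: str,
         policy: Callable[[str], str],
         rate: Callable[[str, str], bool]) -> Tuple[DialogueState, str, int]:
    """One MDP transition for a single user turn."""
    response = policy(state.context_for(question))   # Action = response
    reward = 1 if rate(question, response) else -1   # Reward = thumbs up (+1) / down (-1)
    next_state = DialogueState(state.turns + [(question, response)])
    return next_state, response, reward

# Hypothetical stand-ins for the language model and the user's rating.
def toy_policy(context: str) -> str:
    return f"reply #{context.count('User:')}"

def toy_rating(question: str, response: str) -> bool:
    return True

s0 = DialogueState()
s1, r1, reward1 = step(s0, "Hi", toy_policy, toy_rating)
s2, r2, reward2 = step(s1, "Tell me more", toy_policy, toy_rating)
print(r1, r2, reward2, len(s2.turns))  # reply #1 reply #2 1 2
```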

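Although effective, this strategy has the following drawbacks. First, the prompt only describes what the user said in their turn, but the user's real intent may not be stated explicitly and has to be inferred. A more powerful model, such as the partially observable Markov decision process (POMDP) previously proposed for dialogue bots, might model the hidden user intent accurately.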
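A POMDP agent keeps a belief, which is a probability distribution over the hidden intent, and updates it with Bayes' rule after each observation. The sketch below shows only that belief update. The intents, cue words, and likelihoods are invented. The intent is assumed not to change within a session. A full POMDP would also choose its responses based on the belief.

```python
def update_belief(belief, observation, likelihood):
    """Bayes update of the belief over hidden intents:
    b'(i) is proportional to P(observation | i) * b(i).
    Assumes the intent stays fixed within the session (no transition step)."""
    unnormalised = {i: likelihood[i].get(observation, 0.0) * p for i, p in belief.items()}
    total = sum(unnormalised.values())
    if total == 0:
        return dict(belief)  # observation impossible under every intent: keep the prior
    return {i: v / total for i, v in unnormalised.items()}

# Hypothetical intents and cue-word likelihoods P(cue | intent).
LIKELIHOOD = {"book_flight":   {"tomorrow": 0.3, "ticket": 0.6},
              "check_weather": {"tomorrow": 0.5, "ticket": 0.05}}

belief = {"book_flight": 0.5, "check_weather": 0.5}
for cue in ["tomorrow", "ticket"]:  # cues observed in successive user turns
    belief = update_belief(belief, cue, LIKELIHOOD)
    print(cue, {i: round(p, 3) for i, p in belief.items()})
# tomorrow {'book_flight': 0.375, 'check_weather': 0.625}
# ticket {'book_flight': 0.878, 'check_weather': 0.122}
```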

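Second, ChatGPT is first trained with language fitting (learning to generate language) as its objective and then trained/fine-tuned with human labels for dialogue goals. Because the platform is open-ended, real users' aims and objectives may not match the rewards used in training/fine-tuning. To examine the equilibria and conflicts of interest between humans and agents, it may be worth adopting a game-theoretic perspective.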
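As a minimal illustration of the game-theoretic view, the sketch below enumerates the pure-strategy Nash equilibria of a two-player game between a user and an agent. The actions and payoffs are hypothetical. They were chosen only to show the kind of question such an analysis asks.

```python
from itertools import product

USER = ("explicit", "vague")                # how the user states their real goal
AGENT = ("follow_literal", "ask_clarify")   # how the agent responds
# Hypothetical (user, agent) payoffs, invented for illustration, not measured.
PAYOFFS = {("explicit", "follow_literal"): (3, 3),
           ("explicit", "ask_clarify"):    (2, 1),
           ("vague",    "follow_literal"): (1, 2),
           ("vague",    "ask_clarify"):    (2, 2)}

def pure_nash_equilibria(payoffs, user_actions, agent_actions):
    """Action pairs where neither side gains by unilaterally switching its action."""
    equilibria = []
    for u, a in product(user_actions, agent_actions):
        user_pay, agent_pay = payoffs[(u, a)]
        user_stable = all(payoffs[(u2, a)][0] <= user_pay for u2 in user_actions)
        agent_stable = all(payoffs[(u, a2)][1] <= agent_pay for a2 in agent_actions)
        if user_stable and agent_stable:
            equilibria.append((u, a))
    return equilibria

print(pure_nash_equilibria(PAYOFFS, USER, AGENT))
# [('explicit', 'follow_literal'), ('vague', 'ask_clarify')]
```

Both outcomes are stable, yet the second leaves both parties worse off (2, 2 instead of 3, 3). Spotting such inefficient equilibria is one question this kind of analysis can address.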

5.Novel applications

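As ChatGPT has demonstrated, foundation language models have two distinctive characteristics that we believe will drive future machine learning and foundation language model applications. The first is their superior language skills; the second is their embedded semantics and early reasoning abilities (in the form of human language). As an interface, the former will greatly lower the barrier to entry for applied machine learning, while the latter will substantially broaden the range of machine learning applications.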

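As the new learning pipeline introduced in Section 1 shows, prompts and in-context learning remove the bottleneck of data engineering and the effort needed to build and train models. Moreover, exploiting reasoning ability could let us automatically decompose a hard task and solve each of its subtasks. This will substantially transform many industries and application areas. In internet companies, dialogue-based interfaces are an obvious application for web and mobile search, recommender systems, and advertising. However, because we are used to keyword-based search systems built on inverted indexes of URLs, the change will not be easy. People will have to be retaught to use longer, natural-language queries. In addition, foundation language models are usually rigid and inflexible. They lack current information about recent events, they often hallucinate facts, and they offer neither retrieval nor verification. We therefore need just-in-time foundation models that can evolve dynamically over time.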

We therefore call for the development of new applications, including but not limited to the following areas:

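- Novel prompt engineering, its procedures, and software support.

- Model-based web search, recommendation, and advertisement generation; new business models for conversational advertising.

- Techniques for dialogue-based IT services, software systems, wireless communications (personalised messaging systems), and customer service systems.

- Generating robotic process automation (RPA) and software testing and verification from foundation language models.

- AI-assisted programming.

- Novel content generation tools for the creative industries.

- Unifying language models with operations research, enterprise intelligence, and optimisation.

- Efficient and cost-effective methods for serving large foundation models in cloud computing.

- Foundation models for reinforcement learning, multi-agent learning, and other AI decision-making domains.

- Language-assisted robotics.

- Foundation models and reasoning for combinatorial optimisation, electronic design automation (EDA), and chip design.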

About the author

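Jun Wang is a Professor in the Department of Computer Science at University College London (UCL) and co-founder and director of the Shanghai Digital Brain Research Institute. His research focuses on decision intelligence and large models, including machine learning, reinforcement learning, multi-agent systems, data mining, computational advertising, and recommender systems. He has published more than 200 academic papers and two academic monographs and has received multiple best paper awards. He has also led his team in developing the world's first multi-agent decision-making large model and a multimodal decision-making large model in the global first tier.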

Appendix:

Call for Innovation: Post-ChatGPT Theories of Artificial General Intelligence and Their Applications

ChatGPT has recently caught the eye of the research community, the commercial sector, and the general public. It is a generic chatbot that can respond to open-ended prompts or questions from users. Curiosity is piqued by its superior and human-like language skills delivering coherent, consistent, and well-structured responses. Its multi-turn dialogue interaction supports a wide range of text and code-based tasks, including novel creation, letter composition, textual gameplay, and even robot manipulation through code generation, thanks to a large pre-trained generative language model. This gives the public faith that generalist machine learning and machine understanding are achievable very soon.

If one were to dig deeper, one might discover that when programming code is added as training data, certain reasoning abilities, common sense understanding, and even chain of thought (a series of intermediate reasoning steps) may appear as emergent abilities [1] when models reach a particular size. While the new finding is exciting and opens up new possibilities for AI research and applications, it nevertheless provokes more questions than it resolves. Can these emergent abilities, for example, serve as an early indicator of higher intelligence, or are they simply naive mimicry of human behaviour hidden by data? Would continuing the expansion of already enormous models lead to the birth of artificial general intelligence (AGI), or are these models simply superficially intelligent with constrained capability? If answered, these questions may lead to fundamental shifts in artificial intelligence theory and applications.

We therefore urge not just replicating ChatGPT’s successes but most importantly, pushing forward ground-breaking research and novel application development in the following areas of artificial intelligence (by no means an exhaustive list):

1.New machine learning theory that goes beyond the established paradigm of task-specific machine learning

Inductive reasoning is a type of reasoning in which we draw conclusions about the world based on past observations. Machine learning can be loosely regarded as inductive reasoning in the sense that it leverages past (training) data to improve performance on new tasks. Taking machine translation as an example, a typical machine learning pipeline involves the following four major steps:

1.define the specific problem, e.g., translating English sentences to Chinese: E→C,

2.collect the data, e.g., sentence pairs { E→C },

3.train a model, e.g., a deep neural network with inputs {E} and outputs {C},

4.apply the model to an unseen data point, e.g., input a new English sentence E’ and output a Chinese translation C’ and evaluate the result.

As shown above, traditional machine learning isolates the training for each specific task. Hence, for each new task, one must reset and redo the process from step 1 to step 4, losing all acquired knowledge (data, models, etc.) from previous tasks. For instance, you would need a different model if you want to translate French into Chinese, rather than English to Chinese.

Under this paradigm, the job of machine learning theorists is focused chiefly on understanding the generalisation ability of a learning model from the training data to the unseen test data [2, 3]. For instance, a common question would be how many samples we need in training to achieve a certain error bound of predicting unseen test data. We know that inductive bias (i.e., prior knowledge or prior assumption) is required for a learning model to predict outputs that it has not encountered. This is because the output value in unknown circumstances is completely arbitrary, making it impossible to address the problem without making certain assumptions. The celebrated no-free-lunch theorem [5] further says that any inductive bias has a limitation; it is only suitable for a certain group of problems, and it may fail elsewhere if the prior knowledge assumed is incorrect.

Figure 1 A screenshot of ChatGPT used for machine translation. The prompt contains instruction only, and no demonstration example is necessary.

While the above theories still hold, the arrival of foundation language models may have altered our approach to machine learning. The new machine learning pipeline could be the following (using the same machine translation problem as an example; see Figure 1):

1.API access to a foundation language model trained elsewhere by others, e.g., a model trained with diverse documents, including paired English/Chinese corpora,

2.with a few examples or no example at all, design a suitable text description (known as a prompt) for the task at hand, e.g., Prompt = {a few examples E→C },

3.conditioned on the prompt and a given new test data point, the language model generates the answer, e.g., append E’ to the prompt and generate C’ from the model,

4.interpret the answer as the predicted result.

As shown in step 1, the foundation language model serves as a one-size-fits-all knowledge repository. The prompt (and context) presented in step 2 allow the foundation language model to be customised to a specific goal or problem with only a few demonstration instances. While the aforementioned pipeline is primarily limited to text-based problems, it is reasonable to assume that, as the development of cross-modality (see Section 3) foundation pre-trained models continues, it will become the standard for machine learning in general. This could break down the necessary task barriers to pave the way for AGI.

However, it is still early days in determining how the demonstration examples in a prompt text operate. Empirically, we now understand from some early work [2] that the format of demonstration samples is more significant than the correctness of the labels. For instance, as illustrated in Figure 1, we do not need to provide example translations, only a language instruction. But are there any theoretical limits to its adaptability, as suggested by the no-free-lunch theorem? Can the context and instruction-based knowledge stated in prompts (step 2) be integrated into the model for future use? We're only scratching the surface with these inquiries. We therefore call for a new understanding of, and new principles behind, this new form of in-context learning and its theoretical limitations and properties, such as generalisation bounds.

Figure 2 An illustration of AIGA for designing computer games.

2.Developing reasoning skills

We are on the edge of an exciting era in which all our linguistic and behavioural data can be mined to train (and be absorbed by) an enormous computerised model. It is a tremendous accomplishment as our whole collective experience and civilisation could be digested into a single (hidden) knowledge base (in the form of artificial neural networks) for later use. In fact, ChatGPT and large foundation models are said to demonstrate some form of reasoning capacity. They may even arguably grasp the mental states of others to some extent (theory of mind) [6]. This is accomplished by data fitting (predicting masked language tokens as training signals) and imitation (of human behaviours). Yet, it is debatable if this entirely data-driven strategy will bring us greater intelligence.

To illustrate this notion, consider teaching an agent how to play chess. Even if the agent has access to a limitless amount of human play data, it will be very difficult for it, by only imitating existing policies, to generate new policies better than those already present in the data. Using the data, one can, however, develop an understanding of the world (e.g., the rules of the game) and use it to "think" (construct a simulator in its brain to gather feedback in order to create better policies). This highlights the importance of inductive bias; rather than relying on brute force alone, a learning agent needs some model of the world, inferred from the data, in order to improve itself.

Thus, there is an urgent need to thoroughly investigate and understand the emerging capabilities of foundation models. Apart from language skills, we advocate research into acquiring actual reasoning ability by investigating the underlying mechanisms [9]. One promising approach would be to draw inspiration from neuroscience and brain science to decipher the mechanics of human reasoning and advance language model development. At the same time, building a solid theory of mind may also necessitate an in-depth knowledge of multiagent learning [10,11] and its underlying principles.

3.From AI Generating Content (AIGC) to AI Generating Action (AIGA)

The implicit semantics developed on top of human languages is integral to foundation language models. How to utilise it is a crucial topic for generalist machine learning. For example, once the semantic space is aligned with other media (such as photos, videos, and sounds) or other forms of data from human and machine behaviours, such as robotic trajectory/actions, we acquire semantic interpretation power for them with no additional cost [7, 14]. In this manner, machine learning (prediction, generation, and decision-making) would be generic and decomposable. Yet, dealing with cross-modality alignment is a substantial hurdle for us due to the labour-intensive nature of labelling the relationships. Additionally, human value alignment becomes difficult when numerous parties have conflicting interests.

A fundamental drawback of ChatGPT is that it can communicate directly with humans only. Yet, once a sufficient alignment with the external world has been established, foundation language models should be able to learn how to interact with various parties and environments [7, 14]. This is significant because it would extend their language-based reasoning ability and semantics to broader applications and capabilities beyond conversation. For instance, such a model may evolve into a generalist agent capable of navigating the Internet [7], controlling computers [13], and manipulating robots [12]. Thus, it becomes more important to implement procedures that ensure responses from the agent (often in the form of generated actions) are secure, reliable, unbiased, and trustworthy.

Figure 2 provides a demonstration of AIGA [7] for interacting with a game engine to automate the process of designing a video game.

4.Multiagent theories of interactions with foundation language models

ChatGPT uses in-context learning and prompt engineering to drive multi-turn dialogue with people in a single session, i.e., given the question or prompt, the entire prior conversation (questions and responses) is sent to the system as extra context to construct the response. It is a straightforward Markov decision process (MDP) model for conversation:

{State = context, Action = response, Reward = thumbs up/down rating}.

While effective, this strategy has the following drawbacks: first, a prompt simply provides a description of the user's response, but the user's genuine intent may not be explicitly stated and must be inferred. Perhaps a robust model, as proposed previously for conversation bots, would be a partially observable Markov decision process (POMDP) that accurately models a hidden user intent.

Second, ChatGPT is first trained by fitting language (language modelling) and then trained/fine-tuned with human labels for conversation goals. Due to the platform's open-ended nature, actual users' aims and objectives may not align with the trained/fine-tuned rewards. In order to examine the equilibria and conflicting interests of humans and agents, it may be worthwhile to adopt a game-theoretic perspective [9].

5.Novel applications

As demonstrated by ChatGPT, there are two distinctive characteristics of foundation language models that we believe will be the driving force behind future machine learning and foundation language model applications. The first is their superior linguistic skills, while the second is their embedded semantics and early reasoning abilities (in the form of human language). As an interface, the former will greatly lower the entry barrier to applied machine learning, whilst the latter will significantly broaden how machine learning is applied.

As demonstrated in the new learning pipeline presented in Section 1, prompts and in-context learning eliminate the bottleneck of data engineering and the effort required to construct and train a model. Moreover, exploiting the reasoning capabilities could enable us to automatically dissect a hard task and solve each of its subtasks. Hence, it will dramatically transform numerous industries and application sectors. In internet-based enterprises, the dialogue-based interface is an obvious application for web and mobile search, recommender systems, and advertising. Yet, as we are accustomed to the keyword-based URL inverted index search system, the change is not straightforward. People must be retaught to use longer queries and natural language as queries. In addition, foundation language models are typically rigid and inflexible. They lack access to current information regarding recent events. They typically hallucinate facts and provide neither retrieval nor verification capabilities. Thus, we need a just-in-time foundation model capable of undergoing dynamic evolution over time.

We therefore call for novel applications including but not limited to the following areas:

- Novel prompt engineering, its procedure, and software support.

- Generative and model-based web search, recommendation and advertising; novel business models for conversational advertisement.

- Techniques for dialogue-based IT services, software systems, wireless communications (personalised messaging systems) and customer service systems.

- Automation generation from foundation language models for Robotic process automation (RPA) and software test and verification.

- AI-assisted programming.

- Novel content generation tools for creative industries.

- Unifying language models with operations research and enterprise intelligence and optimisation.

- Efficient and cost-effective methods of serving large foundation models in Cloud computing.

- Foundation models for reinforcement learning, multiagent learning, and other decision-making domains.

- Language-assisted Robotics.

- Foundation models and reasoning for combinatorial optimisation, EDA and chip design.