Author | Lokesh Joshi

Translator | Zhang Zhegang

Reviewer | Noe

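Introduction

Today NodeJS has a huge number of libraries that cover practically every routine need. Web scraping has a low barrier to entry, and it has given rise to a large number of freelancers and development teams. Naturally, the NodeJS library ecosystem contains almost everything needed for web parsing.

This article assumes you already have a working core setup for a NodeJS parsing application. We will walk through an example that collects data from several hundred pages of https://outlet.scotch-soda.com, a site that sells branded clothing and accessories. The code samples resemble real-world scraping applications; one of them was used for scraping Yelp.

Given the scope of this article, the examples leave out several production components, such as the database, containerization, proxy connections and process managers (for example pm2). We also won't dwell on obvious matters such as linting.

We will, however, keep the basic project structure complete. We will use the most popular libraries (Axios, Cheerio, Lodash), extract the authorization keys (session cookies) with Puppeteer, and use NodeJS streams to scrape the data and write it to a file.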

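Terminology

This article uses the following terms. The NodeJS application is the server application. The site outlet.scotch-soda.com is the web resource, and the server that hosts it is the web server. In broad terms, we first explore the web resource and its pages in Chrome or Firefox. Then we run the server application, which sends HTTP requests to the web server and receives responses with the corresponding data.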

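Getting the authorization cookie

The content of outlet.scotch-soda.com is available only to authorized users. In this example, authorization is performed in a Chromium browser controlled by the server application, and the cookies are taken from that browser. The cookies are then sent in the HTTP headers of every request to the web server, which lets the application access the protected content. When you scrape large resources with tens or hundreds of thousands of pages, the cookies will need to be refreshed several times.

The application will have the following structure:

cookie-manager.js: the file with the CookieManager class, which is responsible for fetching the cookie;

cookie-storage.js: the file that holds the cookie variable;

index.js: the entry point, where the CookieManager is called;

.env: the environment variables file.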

```text
/project_root
|__ /src
|   |__ /helpers
|   |   |__ cookie-manager.js
|   |   |__ cookie-storage.js
|__ .env
|__ index.js
```

Main directory and file structure

Add the following code to the application:

```javascript
// index.js

// include the environment variables from .env
require('dotenv').config();

const cookieManager = require('./src/helpers/cookie-manager');
const { setLocalCookie } = require('./src/helpers/cookie-storage');

// IIFE - application entry point
(async () => {
  // CookieManager call point
  // login/password values are stored in the .env file
  const cookie = await cookieManager.fetchCookie(
    process.env.LOGIN,
    process.env.PASSWORD,
  );

  if (cookie) {
    // if the cookie was received, assign it as the value of a storage variable
    setLocalCookie(cookie);
  } else {
    console.log('Warning! Could not fetch the Cookie after 3 attempts. Aborting the process...');
    // close the application with an error if it is impossible to receive the cookie
    process.exit(1);
  }
})();
```

In cookie-manager.js:

```javascript
// cookie-manager.js

// 'lodash' - utility functions used to build the cookie string
// 'user-agents' - generates 'User-Agent' values for HTTP headers
// 'puppeteer-extra' - wrapper for the 'puppeteer' library
const _ = require('lodash');
const UserAgent = require('user-agents');
const puppeteerXtra = require('puppeteer-extra');
const StealthPlugin = require('puppeteer-extra-plugin-stealth');

// hide from the web server that the browser is driven by a bot
puppeteerXtra.use(StealthPlugin());

// pause helper; page.waitForTimeout() was deprecated and later removed from Puppeteer
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

class CookieManager {
  // this.browser & this.page - Chromium window and page instances
  constructor() {
    this.browser = null;
    this.page = null;
    this.cookie = null;
  }

  // getter
  getCookie() {
    return this.cookie;
  }

  // setter
  setCookie(cookie) {
    this.cookie = cookie;
  }

  async fetchCookie(username, password) {
    // give 3 attempts to authorize and receive cookies
    const attemptCount = 3;
    // reset a previously fetched (possibly expired) cookie
    this.setCookie(null);

    try {
      // launch a Chromium window; headless only in production
      this.browser = await puppeteerXtra.launch({
        args: ['--window-size=1920,1080'],
        headless: process.env.NODE_ENV === 'PROD',
      });

      // open a blank page and set the 'User-Agent' header
      this.page = await this.browser.newPage();
      await this.page.setUserAgent((new UserAgent()).toString());

      for (let i = 0; i < attemptCount; i += 1) {
        try {
          // request the authorization page and wait for the DOM
          await this.page.goto(process.env.LOGIN_PAGE, { waitUntil: ['domcontentloaded'] });

          // wait for and press the country selection confirmation button, then pause for 1 second
          await this.page.waitForSelector('#changeRegionAndLanguageBtn', { timeout: 5000 });
          await this.page.click('#changeRegionAndLanguageBtn');
          await sleep(1000);

          // wait for the block where the username and password are entered
          await this.page.waitForSelector('div.login-box-content', { timeout: 5000 });
          await sleep(1000);

          // enter the username/password and click the 'Log in' button
          await this.page.type('input.email-input', username);
          await sleep(1000);
          await this.page.type('input.password-input', password);
          await sleep(1000);
          await this.page.click('button[value="Log in"]');
          await sleep(3000);

          // wait for the target content to load in the 'div.main' selector
          await this.page.waitForSelector('div.main', { timeout: 5000 });

          // get the cookies and glue them into a string of the form <key>=<value>[; <key>=<value>]
          this.setCookie(
            _.join(
              _.map(await this.page.cookies(), ({ name, value }) => _.join([name, value], '=')),
              '; ',
            ),
          );

          // when the cookie has been received, break the loop
          if (this.cookie) break;
        } catch (err) {
          // a timeout or navigation error fails only this attempt, so the loop can retry
          console.log('CookieManager: attempt %d of %d failed: %s', i + 1, attemptCount, err.message);
        }
      }

      // return the cookie (or null) to the call point in index.js
      return this.getCookie();
    } finally {
      // close the page and browser instances
      if (this.page) await this.page.close();
      if (this.browser) await this.browser.close();
    }
  }
}

// export a singleton
module.exports = new CookieManager();
```

Some of the variable values are read from the .env file.

```text
# .env
NODE_ENV=DEV
LOGIN_PAGE=https://outlet.scotch-soda.com/de/en/login
LOGIN=tyrell.wellick@ecorp.com
PASSWORD=i*m_on@kde
```

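For example, the headless property of the options object passed to puppeteerXtra.launch resolves to a boolean that depends on the value of process.env.NODE_ENV. During development the variable is set to DEV, so headless is false, and Puppeteer knows it should render the running Chromium window on the monitor.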

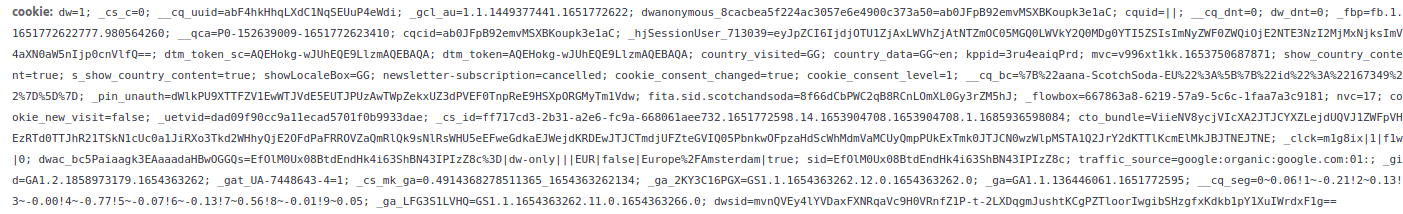

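The page.cookies method returns an array of objects, one per cookie. Among other properties (domain, path, expiry and so on), each object has a name and a value. A chain of Lodash functions loops over the array, extracts each cookie's name/value pair, and glues the pairs into a single string of the form name1=value1; name2=value2.

The cookie-storage.js file: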
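The same transformation can be written in plain JavaScript. Below is a minimal sketch. The cookie objects are made-up samples shaped like the ones page.cookies() resolves to, and toCookieHeader is a hypothetical helper, not part of the project:

```javascript
// Made-up sample objects shaped like the ones page.cookies() resolves to;
// real cookies also carry expires, httpOnly, secure, etc.
const cookies = [
  { name: 'sessionId', value: 'abc123', domain: 'outlet.scotch-soda.com', path: '/' },
  { name: 'locale', value: 'en', domain: 'outlet.scotch-soda.com', path: '/' },
];

// Plain-JavaScript equivalent of the _.join(_.map(...)) chain in CookieManager:
// keep only name and value, join each pair with '=', then join the pairs with '; '
const toCookieHeader = (list) => list.map(({ name, value }) => `${name}=${value}`).join('; ');

console.log(toCookieHeader(cookies)); // sessionId=abc123; locale=en
```

The resulting string is what later goes into the cookie header of every Axios request.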

```javascript
// cookie-storage.js

// cookie storage variable
let localProcessedCookie = null;

// getter
const getLocalCookie = () => localProcessedCookie;

// setter
const setLocalCookie = (cookie) => {
  localProcessedCookie = cookie;

  // return the getLocalCookie function;
  // its scope with the localProcessedCookie value is preserved
  // after setLocalCookie completes
  return getLocalCookie;
};

module.exports = {
  setLocalCookie,
  getLocalCookie,
};
```

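The key idea behind a closure is that a function keeps access to a variable's value after the function in whose scope that variable was created has finished. Normally, when a function returns, it leaves the call stack, and the garbage collector removes its local variables from memory.

In the example above, the value of localProcessedCookie is not collected once setLocalCookie finishes; it stays in memory. This means that it can be read from anywhere in the code for as long as the application is running.

When setLocalCookie is called, it returns the getLocalCookie function. getLocalCookie is a closure over the scope that contains localProcessedCookie, so the garbage collector keeps that variable in memory, and it stays bound to the cookie. Strictly speaking, localProcessedCookie is declared at the top level of the module, and Node caches a module after the first require. The variable would therefore survive even if setLocalCookie returned nothing. What the closure provides is that getLocalCookie, imported in other files such as crawler.js, always reads the current value.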
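The closure mechanism can be isolated in a few lines. Below is a minimal sketch. createCookieStorage is a hypothetical factory, not part of the project. Its local variable stored stays alive only because the returned getter and setter close over it:

```javascript
// Hypothetical factory: `stored` is a local variable of createCookieStorage,
// so it would normally become collectable once the function returns.
function createCookieStorage() {
  let stored = null;
  return {
    // both functions close over `stored`, which keeps it alive
    set: (cookie) => {
      stored = cookie;
    },
    get: () => stored,
  };
}

// createCookieStorage has already returned by the time set/get are called
const storage = createCookieStorage();
storage.set('sessionId=abc123; locale=en');
console.log(storage.get()); // sessionId=abc123; locale=en

// a second call creates its own, independent `stored` variable
const other = createCookieStorage();
console.log(other.get()); // null
```

Unlike cookie-storage.js, each call of the factory gets a separate variable. The module version instead shares a single value across every file that requires it.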

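URL builder

The application needs an initial list of URLs to start crawling. In production, crawling usually starts from the home page of the web resource and, after a number of iterations, builds a collection of links to landing pages. A web resource typically has thousands of such links.

In this example, the crawler receives only 8 links as input. They point to the pages with the main product categories: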

https://outlet.scotch-soda.com/women/clothing

https://outlet.scotch-soda.com/women/footwear

https://outlet.scotch-soda.com/women/accessories/all-womens-accessories

https://outlet.scotch-soda.com/men/clothing

https://outlet.scotch-soda.com/men/footwear

https://outlet.scotch-soda.com/men/accessories/all-mens-accessories

https://outlet.scotch-soda.com/kids/girls/clothing/all-girls-clothing

https://outlet.scotch-soda.com/kids/boys/clothing/all-boys-clothing

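Long link strings like these clutter the code. To avoid that, let's create a compact URL builder with the following files:

categories.js: the file with the route parameters;

target-builder.js: the file that builds the URL collection.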

```text
/project_root
|__ /src
|   |__ /constants
|   |   |__ categories.js       (new)
|   |__ /helpers
|   |   |__ cookie-manager.js
|   |   |__ cookie-storage.js
|   |   |__ target-builder.js   (new)
|__ .env                        (updated)
|__ index.js
```

Add the following code:

```text
# .env
MAIN_PAGE=https://outlet.scotch-soda.com
```

```javascript
// index.js

// import the builder function
const getTargetUrls = require('./src/helpers/target-builder');

(async () => {
  // here goes the process of getting the cookie...

  // get an array of url links and determine its length L
  const targetUrls = getTargetUrls();
  const { length: L } = targetUrls;
})();
```

```javascript
// categories.js
module.exports = [
  'women/clothing',
  'women/footwear',
  'women/accessories/all-womens-accessories',
  'men/clothing',
  'men/footwear',
  'men/accessories/all-mens-accessories',
  'kids/girls/clothing/all-girls-clothing',
  'kids/boys/clothing/all-boys-clothing',
];
```

```javascript
// target-builder.js
const path = require('path');
const categories = require('../constants/categories');

// static fragment of the route parameters
const staticPath = 'global/en';

// create a URL object from the main page address
const url = new URL(process.env.MAIN_PAGE);

// add the full string of route parameters to the URL object
// and return the full url string;
// path.posix.join always uses '/', while path.join would use '\' on Windows
const addPath = (dynamicPath) => {
  url.pathname = path.posix.join(staticPath, dynamicPath);
  return url.href;
};

// build a URL link from each element of the categories array
module.exports = () => categories.map((category) => addPath(category));
```

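These three snippets build the 8 links listed at the beginning of this section, with the global/en segment from staticPath inserted after the domain. They also demonstrate the built-in URL class and the path module. Some readers may think this is overkill, since string interpolation is obviously simpler.

There are two main reasons to use the established NodeJS methods for handling routes and URL query parameters:

1. Interpolation works well only in lightweight applications;

2. Using these methods every day builds good habits.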
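The difference shows up quickly with slightly messy input. Below is a minimal sketch. The trailing slash in MAIN_PAGE and the sz/q query parameters are made-up inputs for illustration, and interpolate and build are hypothetical helpers. Plain interpolation produces a double slash, while URL plus path.posix.join normalizes the path and searchParams encodes the query values:

```javascript
const path = require('path');

const MAIN_PAGE = 'https://outlet.scotch-soda.com/'; // note the trailing slash
const staticPath = 'global/en';

// naive interpolation: the trailing slash in MAIN_PAGE produces '//' in the path
const interpolate = (category) => `${MAIN_PAGE}/${staticPath}/${category}`;

// URL + path.posix.join: the separator is always '/', and repeated slashes are collapsed
const build = (category, query = {}) => {
  const url = new URL(MAIN_PAGE);
  url.pathname = path.posix.join(staticPath, category);
  // URLSearchParams encodes each value (a space becomes '+')
  Object.entries(query).forEach(([key, value]) => url.searchParams.set(key, value));
  return url.href;
};

console.log(interpolate('women/clothing')); // https://outlet.scotch-soda.com//global/en/women/clothing
console.log(build('women/clothing')); // https://outlet.scotch-soda.com/global/en/women/clothing
console.log(build('women/clothing', { sz: 36, q: 'a b' })); // https://outlet.scotch-soda.com/global/en/women/clothing?sz=36&q=a+b
```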

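Crawling and scraping

Add two files to the core logic of the server application:

· crawler.js: contains the Crawler class, which sends requests to the web server and receives the page markup;

· parser.js: contains the Parser class, with methods that scrape the markup and extract the target data.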

```text
/project_root
|__ /src
|   |__ /constants
|   |   |__ categories.js
|   |__ /helpers
|   |   |__ cookie-manager.js
|   |   |__ cookie-storage.js
|   |   |__ target-builder.js
|   |__ crawler.js   (new)
|   |__ parser.js    (new)
|__ .env
|__ index.js         (updated)
```

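First, add a loop to index.js that passes the URL links to the crawler one by one and receives the parsed data:

```javascript
// index.js
const Crawler = require('./src/crawler');

(async () => {
  // getting Cookie process
  // and url-links array...

  // create the crawler only after setLocalCookie() has run:
  // the Crawler constructor reads the cookie once, via getLocalCookie()
  const crawler = new Crawler();
  const { length: L } = targetUrls;

  // run a loop through the length of the array of url links
  for (let i = 0; i < L; i += 1) {
    // call the run method of the crawler for each link
    // and return the parsed data
    const result = await crawler.run(targetUrls[i]);
    // do something with the parsed data...
  }
})();
```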

The crawler code:

```javascript
// crawler.js
require('dotenv').config();

const cheerio = require('cheerio');
const axios = require('axios').default;
const UserAgent = require('user-agents');
const Parser = require('./parser');

// getLocalCookie - closure function, returns localProcessedCookie
const { getLocalCookie } = require('./helpers/cookie-storage');

module.exports = class Crawler {
  constructor() {
    // create a class variable and bind it to a new Axios instance
    // with the necessary headers; getLocalCookie() is read once, here,
    // so the Crawler must be created after setLocalCookie() has been called
    this.axios = axios.create({
      headers: {
        cookie: getLocalCookie(),
        'user-agent': (new UserAgent()).toString(),
      },
    });
  }

  async run(url) {
    console.log('IScraper: working on %s', url);

    try {
      // send an HTTP request to the web server
      const { data } = await this.axios.get(url);

      // create a cheerio object with nodes from the html markup
      const $ = cheerio.load(data);

      // if the markup contains the product list, run the Parser
      // and return the parsing result to index.js
      // ($ itself is a function, so $.length is not a node count)
      if ($('#js-search-result-items').length) {
        const p = new Parser($);
        return p.parse();
      }

      console.log('IScraper: could not fetch or handle the page content from %s', url);
      return null;
    } catch (e) {
      console.log('IScraper: could not fetch the page content from %s: %s', url, e.message);
      return null;
    }
  }
};
```

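The parser's job is to select the data from the cheerio object it receives and build the following structure for each URL link:

```javascript
[
  {
    "Title": "Graphic relaxed-fit T-shirt | Women",
    "CurrentPrice": 25.96,
    "Currency": "€",
    "isNew": false
  },
  {
    // 36 such elements in total for every url-link
  }
]
```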

The parser code:

```javascript
// parser.js
require('dotenv').config();

const _ = require('lodash');

module.exports = class Parser {
  constructor(content) {
    // this.$ - the cheerio object parsed from the markup
    this.$ = content;
    // this.$$ - the cheerio wrapper of the product card currently being parsed
    this.$$ = null;
  }

  // The crawler calls the parse method:
  // it extracts all 'li' elements from the content block
  // and selects the target data in the map loop
  parse() {
    return this.$('#js-search-result-items')
      .children('li')
      .map((i, el) => {
        this.$$ = this.$(el);
        const Title = this.getTitle();
        const CurrentPrice = this.getCurrentPrice();

        // if either key value is missing, reject the element:
        // cheerio's .map() skips elements whose callback returns null or undefined
        if (!Title || !CurrentPrice) return null;

        return {
          Title,
          CurrentPrice,
          Currency: this.getCurrency(),
          isNew: this.isNew(),
        };
      })
      .toArray();
  }

  // next - helper methods used by the 'parse' method
  getTitle() {
    return _.replace(this.$$.find('.product__name').text().trim(), /\s{2,}/g, ' ');
  }

  getCurrentPrice() {
    return _.toNumber(
      _.replace(
        _.last(_.split(this.$$.find('.product__price').text().trim(), ' ')),
        ',',
        '.',
      ),
    );
  }

  getCurrency() {
    return _.head(_.split(this.$$.find('.product__price').text().trim(), ' '));
  }

  isNew() {
    return /new/.test(_.toLower(this.$$.find('.content-asset p').text().trim()));
  }
};
```

The crawler and the parser together produce 8 arrays of objects, which are passed back to the for loop in index.js.
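getCurrency and getCurrentPrice assume that the price text looks like a currency symbol, a space, and an amount with a decimal comma. The sample value "€ 25,96" used below is an assumption inferred from the split and replace calls, not taken from the site. Here is a minimal sketch of the same logic in plain JavaScript; parsePrice is a hypothetical helper:

```javascript
// Hypothetical helper mirroring Parser.getCurrency() and Parser.getCurrentPrice():
// split '<currency> <amount>' on spaces, take the first and last parts,
// and turn the first decimal comma into a dot before converting to a number.
const parsePrice = (text) => {
  const parts = text.trim().split(' ');
  return {
    Currency: parts[0],
    // NaN is falsy, so Parser.parse() would reject an unparseable price
    CurrentPrice: Number(parts[parts.length - 1].replace(',', '.')),
  };
};

console.log(parsePrice('  € 25,96 ')); // { Currency: '€', CurrentPrice: 25.96 }
```

Note that an amount with a thousands separator, such as "€ 1.025,96", becomes "1.025.96" and converts to NaN, so the parser would drop that product.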

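Writing to a file with streams

To write to a file, we use a writable stream. A stream is a JS object with a number of methods for processing chunks of data that arrive one after another. All streams inherit from the EventEmitter class, so they can react to events that occur in the runtime environment. You may have come across something like this: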

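```javascript
myServer.on('request', (request, response) => {
  // something is put into the response
});

// or

myObject.on('data', (chunk) => {
  // do something with the data
});
```

These are good examples of NodeJS event handling, despite the not-so-original names myServer and myObject. An HTTP server is itself an EventEmitter, and the request and response objects it passes to the handler are streams. Here they listen for certain events (the arrival of an HTTP request, the arrival of a chunk of data) and then put the incoming data to work. Streams get their name from the fact that they process data in small chunks and need very little memory.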
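Both points (streams are EventEmitters, and listeners run when an event is emitted) can be checked directly. Below is a minimal runnable sketch. myObject here is a plain EventEmitter standing in for the hypothetical object in the snippet:

```javascript
const { EventEmitter } = require('events');
const { Readable, Writable } = require('stream');

// every stream class inherits from EventEmitter
console.log(new Readable() instanceof EventEmitter); // true
console.log(new Writable() instanceof EventEmitter); // true

// emit() calls the registered 'data' listeners synchronously, in registration order
const myObject = new EventEmitter();
const received = [];
myObject.on('data', (chunk) => {
  received.push(chunk);
});
myObject.emit('data', 'chunk 1');
myObject.emit('data', 'chunk 2');
console.log(received); // [ 'chunk 1', 'chunk 2' ]
```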

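In our case, the for loop receives the 8 arrays of data one after another. They are written to the file in that order, without waiting for the full collection to accumulate and without any accumulator. Because the code knows exactly when the next portion of parsed data reaches the for loop, no event listeners are needed: the data can be written immediately with the stream's built-in write method.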
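The for loop described above calls write() without checking the boolean it returns. That is harmless for 8 small batches. With larger crawls, however, the stream's internal buffer can fill faster than the disk drains it. write() then returns false, and Node's stream documentation recommends waiting for the 'drain' event before writing more. Below is a minimal sketch. writeChunk is a hypothetical helper, and a deliberately slow in-memory Writable (16-byte highWaterMark, callback deferred with setImmediate) stands in for fs.createWriteStream():

```javascript
const { Writable } = require('stream');
const { once } = require('events');

// Hypothetical helper: write one chunk and, if the internal buffer is full
// (write() returned false), wait for 'drain' before continuing.
async function writeChunk(writer, chunk) {
  if (!writer.write(chunk)) {
    await once(writer, 'drain');
  }
}

// slow in-memory sink with a tiny buffer, standing in for fs.createWriteStream()
const written = [];
const slowSink = new Writable({
  highWaterMark: 16, // bytes
  write(chunk, encoding, callback) {
    written.push(chunk.toString());
    setImmediate(callback); // simulate a slow disk
  },
});

// a 32-byte chunk exceeds the 16-byte highWaterMark, so write() signals backpressure
const accepted = slowSink.write('x'.repeat(32));
console.log(accepted); // false

(async () => {
  await once(slowSink, 'drain');
  for (let i = 0; i < 5; i += 1) {
    await writeChunk(slowSink, `line ${i}\n`);
  }
  slowSink.end();
})();
```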

Where to write

```text
/project_root
|__ /data
|   |__ data.json   (new)
...
```

```javascript
// index.js
const fs = require('fs');
const path = require('path');
const _ = require('lodash');

// use constants to simplify work with addresses
const resultDirPath = path.join('./', 'data');
const resultFilePath = path.join(resultDirPath, 'data.json');

// check if the data directory exists; create it if necessary
// if the data.json file exists - delete all its data
// ...if not - create an empty one
if (!fs.existsSync(resultDirPath)) fs.mkdirSync(resultDirPath);
fs.writeFileSync(resultFilePath, '');

(async () => {
  // getting Cookie process
  // and url-links array...

  // create a stream object for writing
  // and write an opening square bracket with a line break on the first line
  const writer = fs.createWriteStream(resultFilePath);
  writer.write('[\n');

  // run a loop through the length of the url-links array
  for (let i = 0; i < L; i += 1) {
    const result = await crawler.run(targetUrls[i]);

    // if an array with parsed data is received, determine its length l
    if (!_.isEmpty(result)) {
      const { length: l } = result;

      // using the write method, add the next portion
      // of the incoming data to data.json
      for (let j = 0; j < l; j += 1) {
        // no comma after the very last object of the last url-link
        if (i + 1 === L && j + 1 === l) {
          writer.write(` ${JSON.stringify(result[j])}\n`);
        } else {
          writer.write(` ${JSON.stringify(result[j])},\n`);
        }
      }
    }
  }

  // close the JSON array and the stream
  writer.write(']\n');
  writer.end();
})();
```

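The nested for loop solves one problem. To get a valid JSON file as output, the last object in the resulting array must not be followed by a comma. The nested loop determines which object is the last one written by the application and omits the comma after it.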
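One edge case remains. If the last URL yields no data (crawler.run() returns null or an empty array), the check i + 1 === L && j + 1 === l never matches, and the previous object keeps its trailing comma. Below is a minimal sketch of an alternative, assuming the same batches-of-objects input: write the separator before every object except the first. writeJsonArray is a hypothetical helper. An in-memory Writable stands in for fs.createWriteStream(), so the result can be checked without touching the disk:

```javascript
const { Writable } = require('stream');

// Hypothetical helper: writes batches of objects (one batch per crawled URL)
// as a single JSON array. The separator is written before every object except
// the first, so a null or empty final batch cannot leave a trailing comma.
function writeJsonArray(writer, batches) {
  let isFirst = true;
  writer.write('[\n');
  for (const batch of batches) {
    // skip failed crawls (null); empty arrays simply contribute nothing
    if (!Array.isArray(batch)) continue;
    for (const item of batch) {
      writer.write(`${isFirst ? '' : ',\n'} ${JSON.stringify(item)}`);
      isFirst = false;
    }
  }
  writer.write('\n]\n');
  writer.end();
}

// in-memory sink standing in for fs.createWriteStream('data/data.json')
const chunks = [];
const sink = new Writable({
  write(chunk, encoding, callback) {
    chunks.push(chunk.toString());
    callback();
  },
});

writeJsonArray(sink, [[{ Title: 'A', CurrentPrice: 1 }], [{ Title: 'B', CurrentPrice: 2 }], null, []]);
const output = chunks.join('');
console.log(output);
```

Because the comma decision no longer depends on knowing which URL comes last, the loop needs neither the outer index i nor the total length L.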

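If you create data/data.json in advance and keep it open while the code runs, you can watch the writable stream append new portions of data in real time.

Conclusion

The output is a JSON array of the following form: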

```json
[
 {"Title":"Graphic relaxed-fit T-shirt | Women","CurrentPrice":25.96,"Currency":"€","isNew":false},
 {"Title":"Printed mercerised T-shirt | Women","CurrentPrice":29.97,"Currency":"€","isNew":true},
 {"Title":"Slim-fit camisole | Women","CurrentPrice":32.46,"Currency":"€","isNew":false},
 {"Title":"Graphic relaxed-fit T-shirt | Women","CurrentPrice":25.96,"Currency":"€","isNew":true},
 ...
 {"Title":"Piped-collar polo | Boys","CurrentPrice":23.36,"Currency":"€","isNew":false},
 {"Title":"Denim chino shorts | Boys","CurrentPrice":45.46,"Currency":"€","isNew":false}
]
```

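The application's processing time, including authorization, is about 20 seconds.

The complete open-source project code is on GitHub, together with a package.json that lists the dependencies.

About the translator

Zhang Zhegang is a 51CTO community editor and systems operations engineer. He was among the early wave of hardware reviewers and internet professionals in China and previously worked at Alibaba. He has more than ten years of IT project management experience and a broad mix of skills. He has taken part in the architecture design of several websites and the development of e-government systems, and he led the operations of a municipal-level admissions examination management platform.

Original title: Web Scraping Sites With Session Cookie Authentication Using NodeJS Request

Link:

https://hackernoon.com/web-scraping-sites-with-session-cookie-authentication-using-nodejs-request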