Training a Simple MLP Regression Model on the Boston Housing Dataset

Author: 小sen

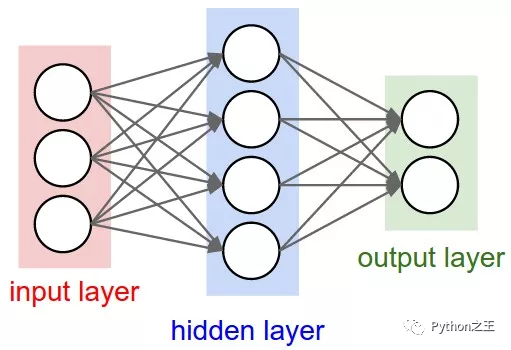

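The multilayer perceptron (MLP) has a long history and is the foundational algorithm of deep neural networks (DNNs). Each MLP model consists of an input layer, one or more hidden layers, and an output layer.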

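MLP Basics

- Purpose: build a regular neural network (i.e., a multilayer perceptron) for simple regression/classification tasks using Keras

MLP Structure

- Each MLP model consists of an input layer, one or more hidden layers, and an output layer

- The number of neurons in each layer is not restricted

An MLP with one hidden layer

- Input neurons: 3

- Hidden neurons: 4

- Output neurons: 2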
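To make the 3-4-2 layout concrete, here is a minimal pure-Python sketch of one forward pass through such a network. The helper names (`dense`, `random_layer`, `forward`), the random initial weights, and the choice of a sigmoid hidden layer with a linear output layer are assumptions made for illustration; Keras does the same work internally with optimized tensor operations.

```python
import math
import random

def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: one weighted sum per output neuron, then an optional activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

def random_layer(n_in, n_out, rng):
    """Hypothetical initializer: small random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
w_hidden, b_hidden = random_layer(3, 4, rng)  # 3 input neurons -> 4 hidden neurons
w_out, b_out = random_layer(4, 2, rng)        # 4 hidden neurons -> 2 output neurons

def forward(x):
    """Run one sample through the hidden layer and then the output layer."""
    hidden = dense(x, w_hidden, b_hidden, activation=sigmoid)
    return dense(hidden, w_out, b_out)  # linear output layer (assumption)

print(forward([0.2, -1.0, 0.5]))  # a list of 2 output values
```

Each hidden neuron holds 3 weights and 1 bias, and each output neuron holds 4 weights and 1 bias, so this small network has 4 × (3 + 1) + 2 × (4 + 1) = 26 parameters.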

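MLP for regression tasks

- Used when the target (“y”) is continuous

- Mean squared error (MSE) is commonly used as both the loss function and the evaluation metric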

- from tensorflow.keras.datasets import boston_housing

- (X_train, y_train), (X_test, y_test) = boston_housing.load_data()
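The mean squared error mentioned above is the average of the squared differences between targets and predictions. A minimal sketch follows; the function name and the sample values are illustrative, not taken from the dataset.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)

# Hypothetical targets and predictions, for illustration only
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 5.0]
print(mean_squared_error(y_true, y_pred))  # (0 + 0 + 4) / 3
```

Because the errors are squared, a single large miss dominates the average, which is one reason MSE penalizes outlying predictions heavily.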

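Dataset description

- The Boston housing dataset has 506 data instances in total (404 for training and 102 for testing)

- 13 attributes (features) are used to predict “the median value of homes at a given location”

- Documentation: https://keras.io/datasets/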
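The 404/102 split can be reproduced with simple arithmetic. The sketch below assumes that `load_data()` holds out 20% of the samples by default (a `test_split` of 0.2) and truncates the training count to an integer; treat that default as an assumption and check it against the Keras version you use.

```python
total_samples = 506
test_split = 0.2  # assumed default hold-out fraction of boston_housing.load_data()

n_train = int(total_samples * (1 - test_split))  # 404.8 truncated to 404
n_test = total_samples - n_train

print(n_train, n_test)  # 404 102
```

With 13 features per instance, `X_train` would then have shape (404, 13) and `X_test` shape (102, 13), which is why the first layer later declares `input_shape = (13,)`.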

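1. Creating the model

- A Keras model object can be created with the Sequential class

- At first the model itself is empty. It is completed by “adding” layers and then compiling

- Documentation: https://keras.io/models/sequential/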

- from tensorflow.keras.models import Sequential

- model = Sequential()

1-1. Adding layers

- Keras layers can be “added” to the model

- Adding layers is like stacking Lego bricks one on top of another

- Documentation: https://keras.io/layers/core/

- from tensorflow.keras.layers import Activation, Dense

- # Keras model with three hidden layers of 10 neurons each

- model.add(Dense(10, input_shape = (13,))) # First hidden layer => input_shape must be given explicitly (13 features)

- model.add(Activation('sigmoid'))

- model.add(Dense(10)) # Hidden layer => only output dimension should be designated

- model.add(Activation('sigmoid'))

- model.add(Dense(10)) # Hidden layer => only output dimension should be designated

- model.add(Activation('sigmoid'))

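- model.add(Dense(1)) # Output layer => output dimension = 1 since this is a regression problem

- # This is equivalent to the above code block; use one or the other, not both. Adding both to the same model stacks eight Dense layers, which matches the model summary below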

- model.add(Dense(10, input_shape = (13,), activation = 'sigmoid'))

- model.add(Dense(10, activation = 'sigmoid'))

- model.add(Dense(10, activation = 'sigmoid'))

- model.add(Dense(1))

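1-2. Compiling the model

- A Keras model should be “compiled” before training

- The loss (function) and the optimizer should be specified

- Documentation (optimizers): https://keras.io/optimizers/

- Documentation (losses): https://keras.io/losses/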

- from tensorflow.keras import optimizers

- sgd = optimizers.SGD(learning_rate = 0.01) # stochastic gradient descent optimizer; older Keras versions spelled this argument lr

- model.compile(optimizer = sgd, loss = 'mean_squared_error', metrics = ['mse']) # for regression problems, mean squared error (MSE) is often employed
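To show what the optimizer does with the MSE loss, here is a minimal sketch of gradient descent on a single linear neuron. The function names and the toy data are assumptions for illustration. For simplicity each step uses the whole toy dataset as one batch, whereas Keras' SGD applies the same update rule to mini-batches.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def sgd_step(w, b, xs, ys, learning_rate=0.01):
    """One gradient-descent update of w and b under the MSE loss."""
    n = len(xs)
    preds = [w * x + b for x in xs]
    grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
    return w - learning_rate * grad_w, b - learning_rate * grad_b

# Toy data generated from y = 2x + 1 (illustrative, not from the housing dataset)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
loss_before = mse(w, b, xs, ys)
for _ in range(200):
    w, b = sgd_step(w, b, xs, ys)
loss_after = mse(w, b, xs, ys)

print(loss_before, loss_after)  # the loss shrinks as w and b move toward 2 and 1
```

The learning rate of 0.01 matches the value passed to the optimizer above; larger values take bigger steps and can diverge, while smaller values converge more slowly.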

Model summary

- model.summary()

- Model: "sequential"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| dense (Dense) | (None, 10) | 140 |
| activation (Activation) | (None, 10) | 0 |
| dense_1 (Dense) | (None, 10) | 110 |
| activation_1 (Activation) | (None, 10) | 0 |
| dense_2 (Dense) | (None, 10) | 110 |
| activation_2 (Activation) | (None, 10) | 0 |
| dense_3 (Dense) | (None, 1) | 11 |
| dense_4 (Dense) | (None, 10) | 20 |
| dense_5 (Dense) | (None, 10) | 110 |
| dense_6 (Dense) | (None, 10) | 110 |
| dense_7 (Dense) | (None, 1) | 11 |

- Total params: 622

- Trainable params: 622

- Non-trainable params: 0
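The parameter counts in the summary can be checked by hand: a Dense layer with `n_inputs` inputs and `n_units` neurons has `n_inputs * n_units` weights plus `n_units` biases. The sketch below uses a hypothetical helper, `dense_params`, and the layer widths read off the summary. The summary lists eight Dense layers, which is consistent with both code blocks from section 1-1 having been added to the same model.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

# Widths as they appear in the summary: 13 input features, then each Dense layer's units
layer_sizes = [13, 10, 10, 10, 1, 10, 10, 10, 1]
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]

print(counts)           # [140, 110, 110, 11, 20, 110, 110, 11]
print(sum(counts))      # 622
print(sum(counts[:4]))  # 371, the total if only one of the two blocks is used
```

The 20 parameters of `dense_4` follow from its input being the single output of `dense_3`: 1 × 10 weights plus 10 biases.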

2. Training

- Train the model on the provided training data

- model.fit(X_train, y_train, batch_size = 50, epochs = 100, verbose = 1)

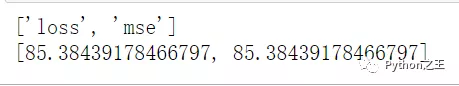
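The `batch_size` and `epochs` arguments determine how many weight updates the training run performs. As a rough sketch, assuming the 404 training samples described earlier and that the final partial batch of each epoch is still used:

```python
import math

n_train = 404     # training samples in the Boston housing split
batch_size = 50
epochs = 100

steps_per_epoch = math.ceil(n_train / batch_size)
last_batch_size = n_train - (steps_per_epoch - 1) * batch_size
total_updates = steps_per_epoch * epochs

print(steps_per_epoch, last_batch_size, total_updates)  # 9 4 900
```

Each epoch therefore consists of eight full batches of 50 samples and one final batch of 4, and the whole run performs 900 gradient updates.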

3. Evaluation

- A Keras model can be evaluated with the evaluate() function

- The evaluation results are returned in a list

- Documentation: https://keras.io/metrics/

- results = model.evaluate(X_test, y_test)

- print(model.metrics_names) # list of metric names the model is employing

- print(results) # actual figure of metrics computed

- print('loss: ', results[0])

- print('mse: ', results[1])
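Since the loss and the metric are both MSE here, the two entries in `results` should be the same number. Pairing the names with the values and taking a square root gives a figure in the units of the target, which is easier to interpret. The numbers below are hypothetical placeholders, not results from this model.

```python
import math

# Hypothetical stand-ins for model.metrics_names and model.evaluate(...) output
metrics_names = ["loss", "mse"]
results = [30.25, 30.25]

report = dict(zip(metrics_names, results))
rmse = math.sqrt(report["mse"])  # root mean squared error, in the target's units

print(report)
print(rmse)
```

The Boston housing target is expressed in thousands of dollars, so an RMSE of 5.5 would correspond to a typical prediction error of roughly $5,500.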

Editor: 姜華

Source: Python之王